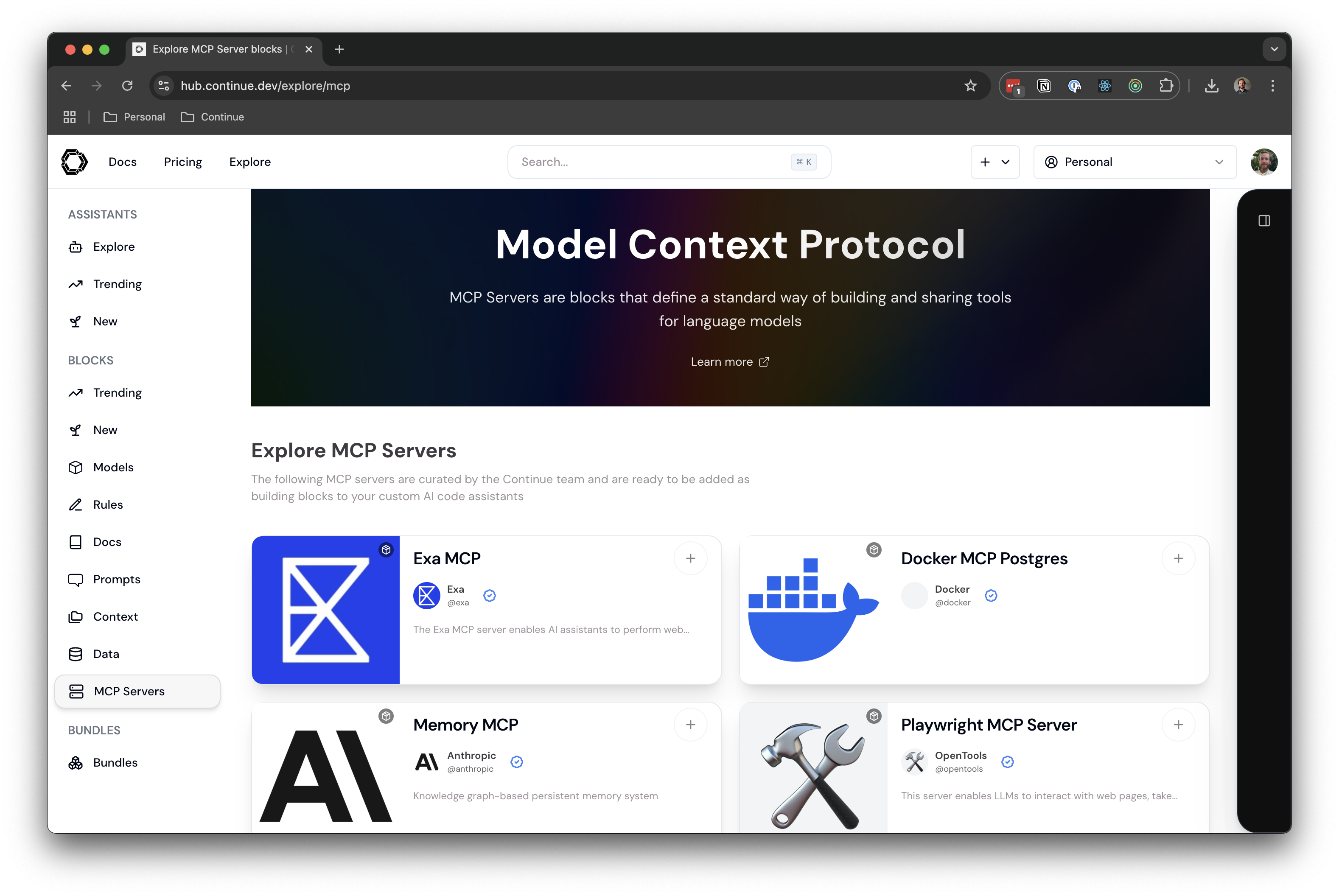

# MCP Blocks

Source: https://docs.continue.dev/customization/mcp-tools

Model Context Protocol servers provide specialized functionality:

* **Enable integration** with external tools and systems

* **Create extensible interfaces** for custom capabilities

* **Support complex interactions** with your development environment

* **Allow partners** to contribute specialized functionality

* **Connect to databases** to understand schema and data models during development

## Learn More

Learn more in the [MCP deep dive](/customization/mcp-tools), and view [`mcpServers`](/reference#mcpservers) in the YAML Reference for more details.

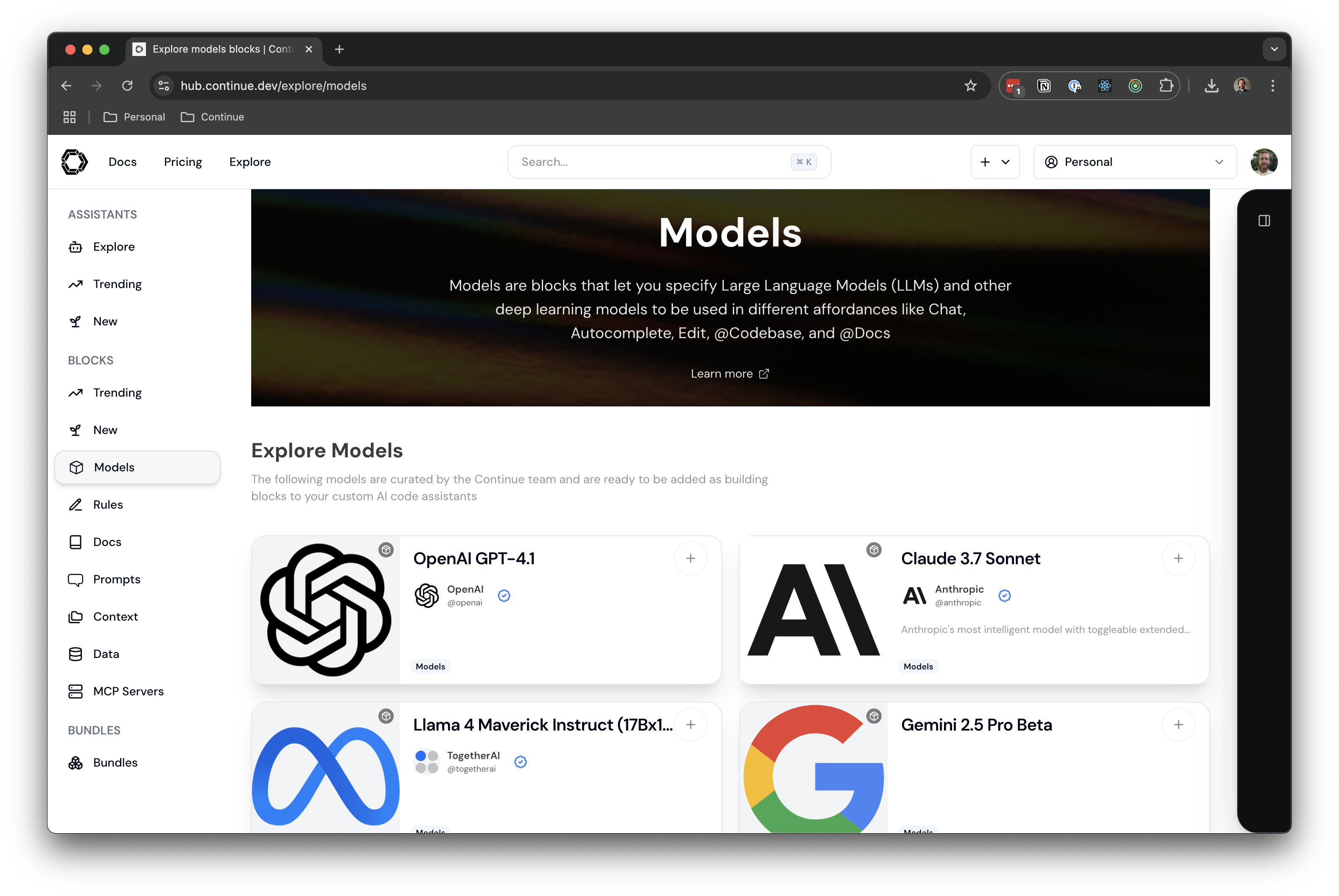

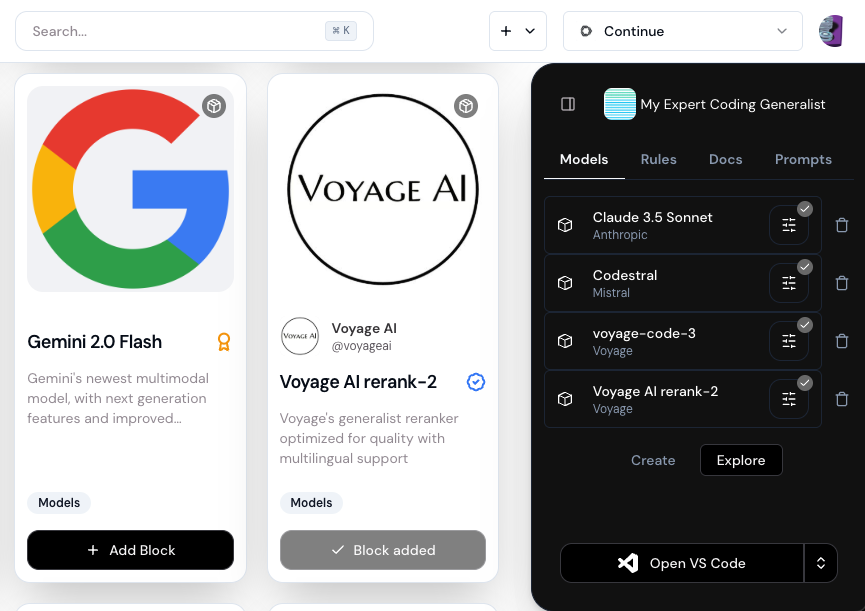

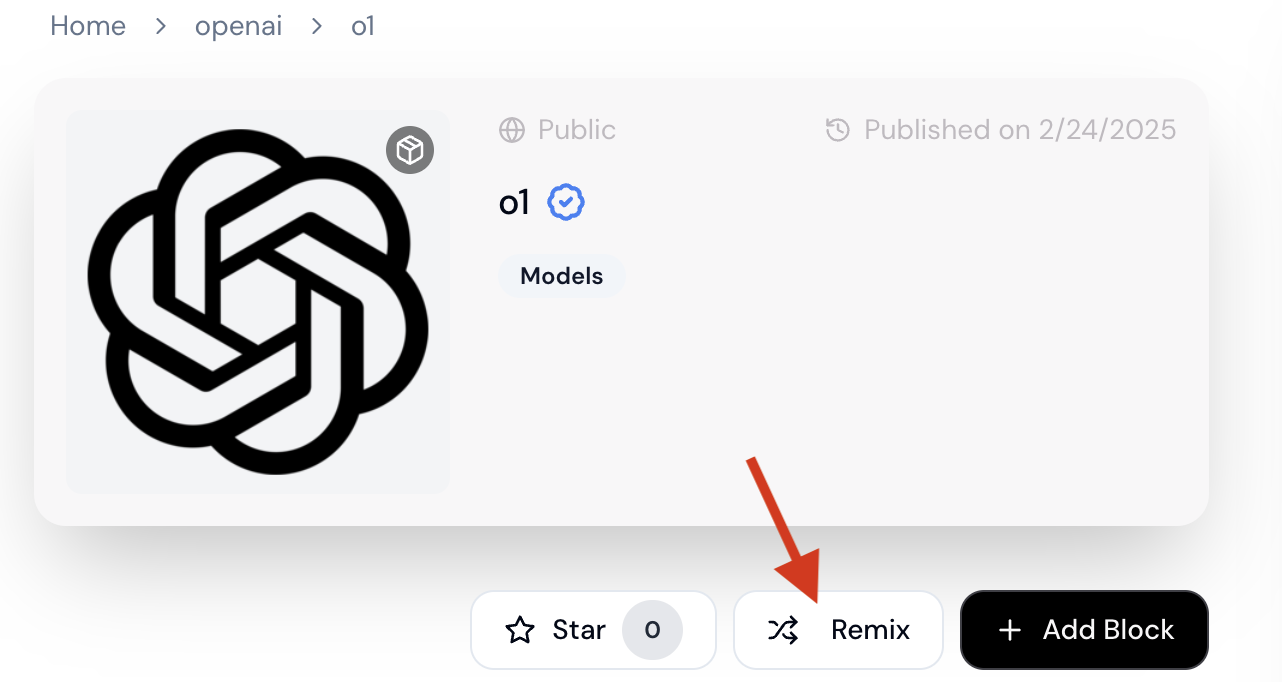

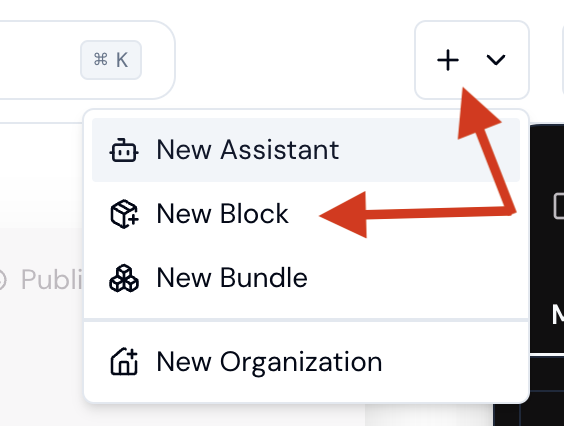

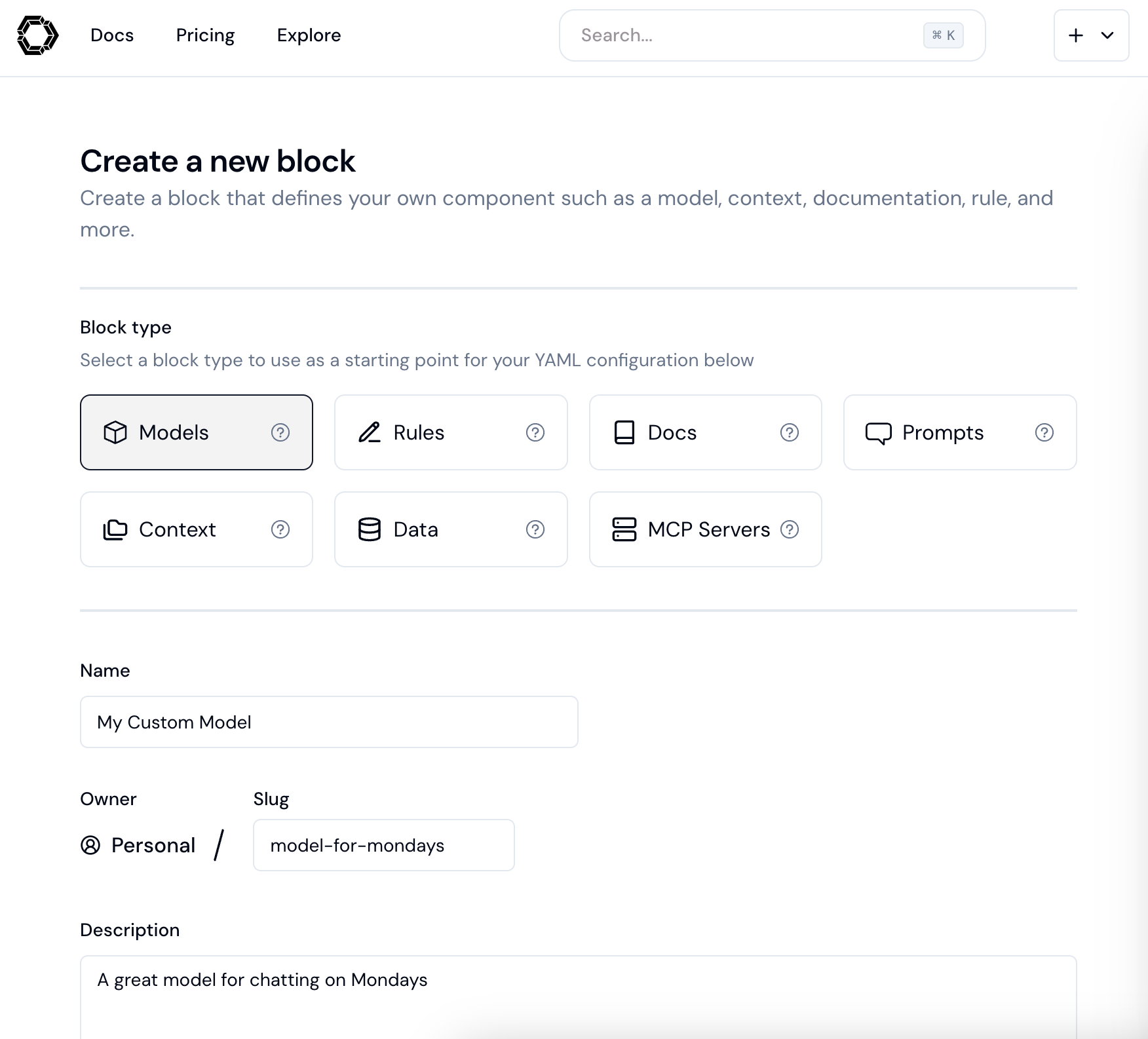

# Model Blocks

Source: https://docs.continue.dev/customization/models

These blocks form the foundation of the entire assistant experience, offering different specialized capabilities:

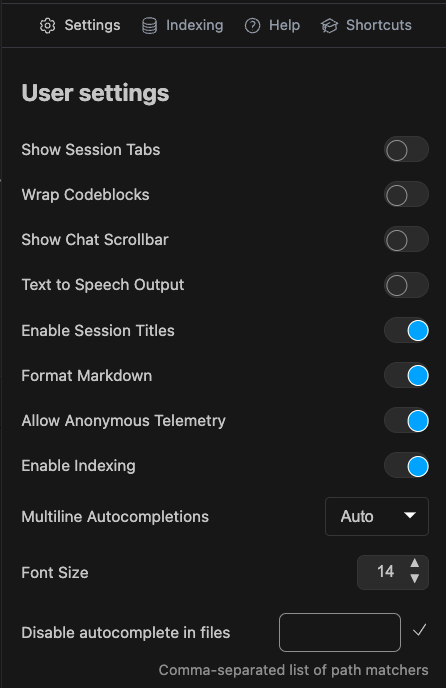

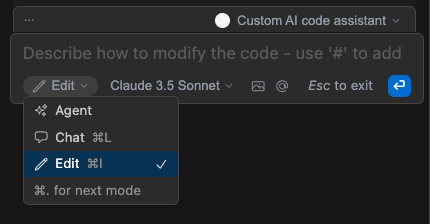

* **[Chat](/customize/model-roles/chat)**: Power conversational interactions about code and provide detailed guidance

* **[Edit](/customize/model-roles/edit)**: Handle complex code transformations and refactoring tasks

* **[Apply](/customize/model-roles/apply)**: Execute targeted code modifications with high accuracy

* **[Autocomplete](/customize/model-roles/autocomplete)**: Provide real-time suggestions as developers type

* **[Embedding](/customize/model-roles/embeddings)**: Transform code into vector representations for semantic search

* **[Reranker](/customize/model-roles/reranking)**: Improve search relevance by ordering results based on semantic meaning

## Learn More

Continue supports [many model providers](/customization/models#openai), including Anthropic, OpenAI, Gemini, Ollama, Amazon Bedrock, Azure, xAI, DeepSeek, and more. Models can have various roles like `chat`, `edit`, `apply`, `autocomplete`, `embed`, and `rerank`.

Read more about roles [here](/customize/model-roles) and view [`models`](/reference#models) in the YAML Reference.

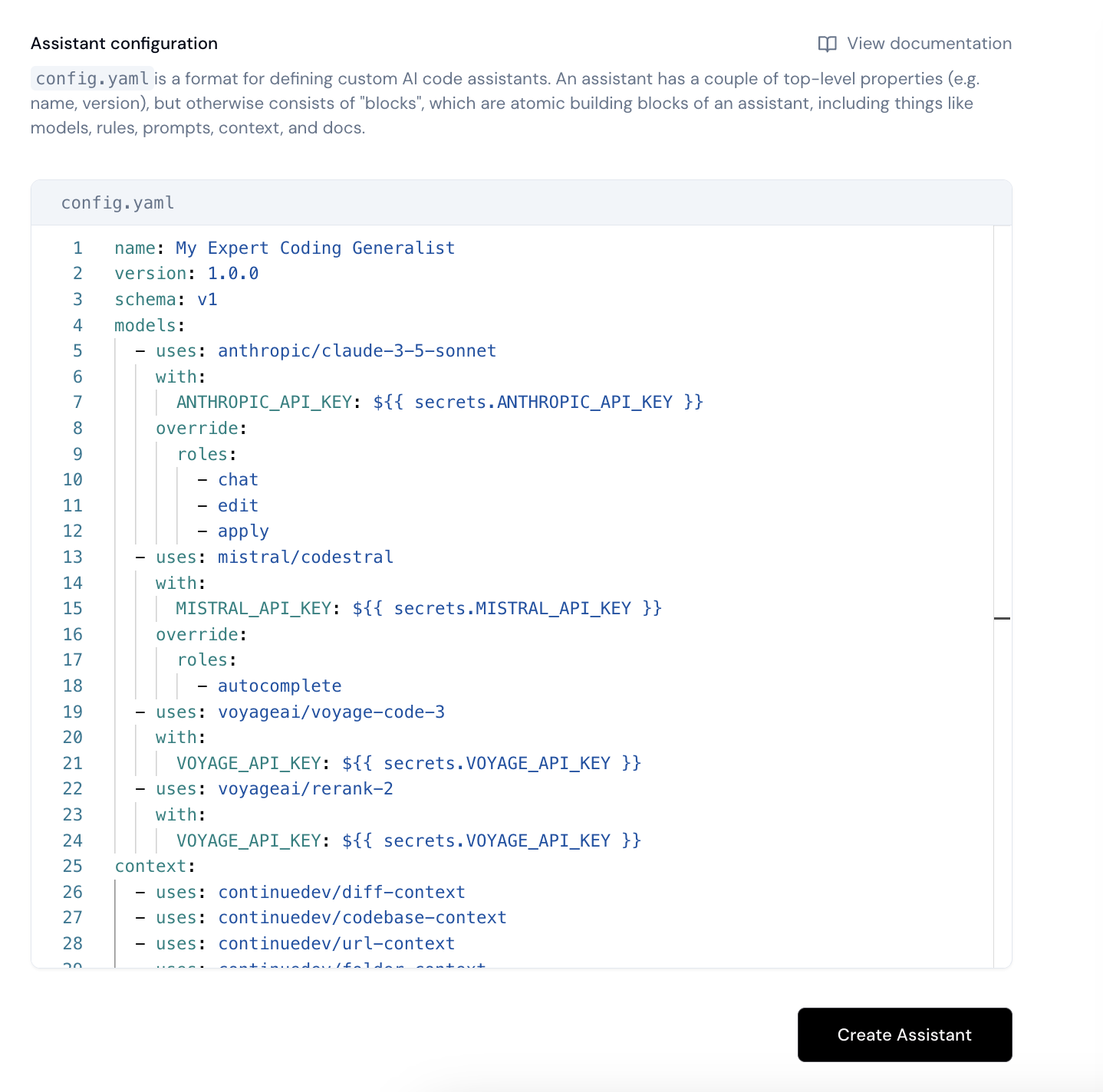

# Overview

Source: https://docs.continue.dev/customization/overview

Continue can be deeply customized. For example you might:

* **Change your Model Provider**. Continue allows you to choose your favorite or even add multiple model providers. This allows you to use different models for different tasks, or to try another model if you're not happy with the results from your current model. Continue supports all of the popular model providers, including OpenAI, Anthropic, Microsoft/Azure, Mistral, and more. You can even self host your own model provider if you'd like. Learn more about [model providers](/customization/models#openai).

* **Select different models for specific tasks**. Different Continue features can use different models. We call these *model roles*. For example, you can use a different model for chat than you do for autocomplete. Learn more about [model roles](/customize/model-roles).

* **Add a Context Provider**. Context providers allow you to add information to your prompts, giving your LLM additional context to work with. Context providers allow you to reference snippets from your codebase, or lookup relevant documentation, or use a search engine to find information and much more. Learn more about [context providers](/customize/custom-providers).

* **Create a Slash Command**. Slash commands allow you to easily add custom functionality to Continue. You can use a slash command that allows you to generate a shell command from natural language, or perhaps generate a commit message, or create your own custom command to do whatever you want. Learn more about [slash commands](/customization/overview#slash-commands).

* **Call external tools and functions**. Unchain your LLM with the power of tools using [Agent](/features/agent/quick-start). Add custom tools using [MCP Servers](/customization/mcp-tools)

Whatever you choose, you'll probably start by editing your Assistant.

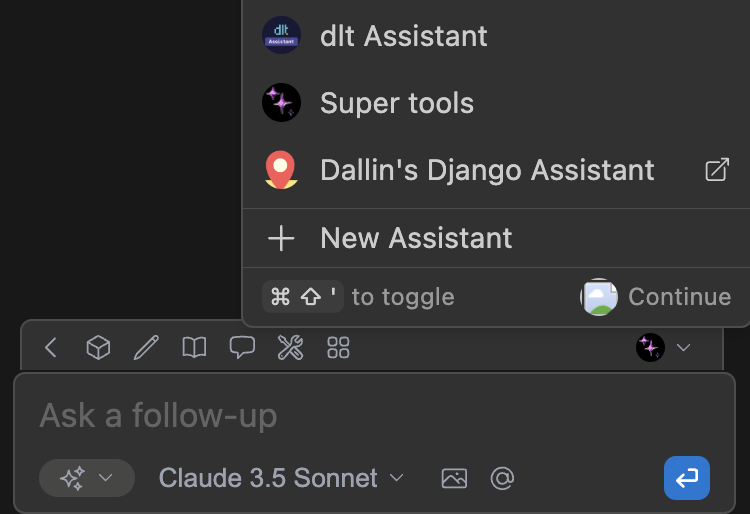

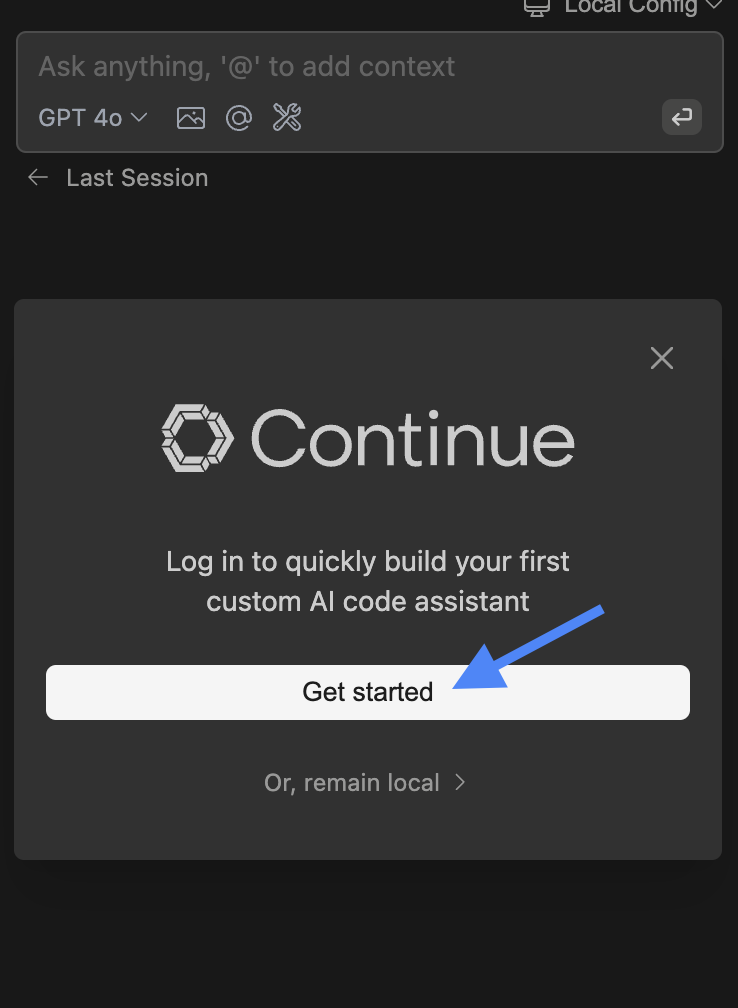

## Editing your assistant

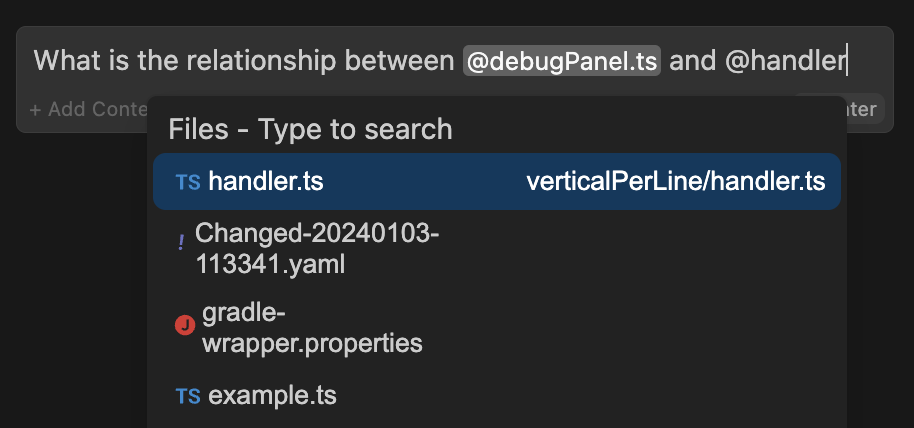

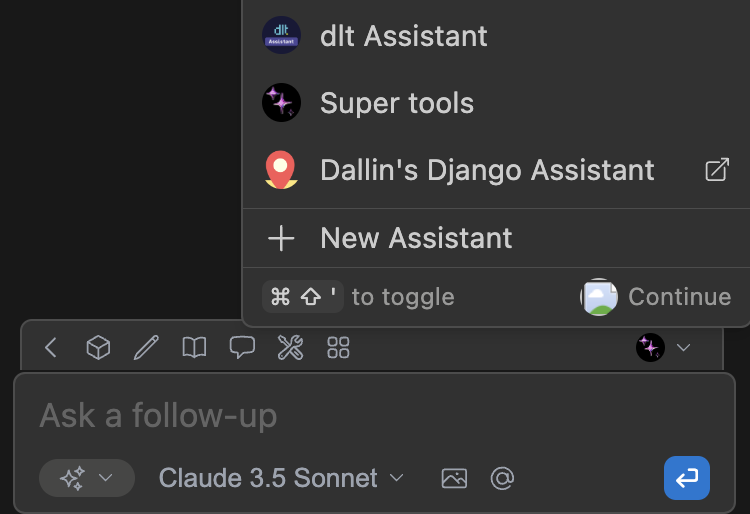

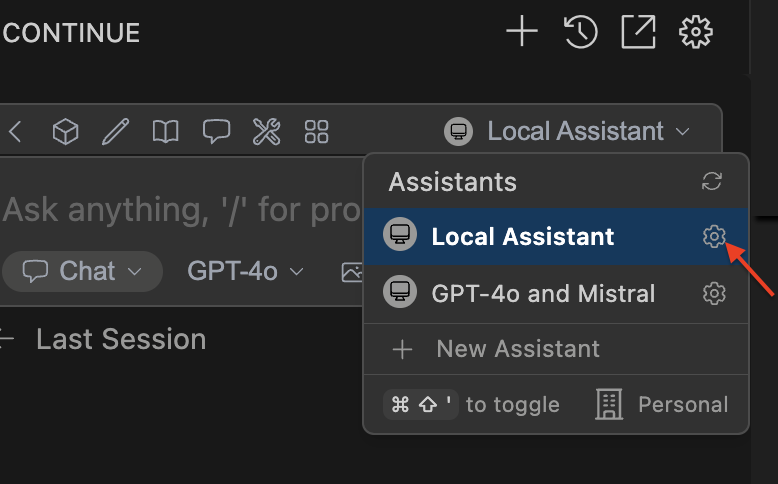

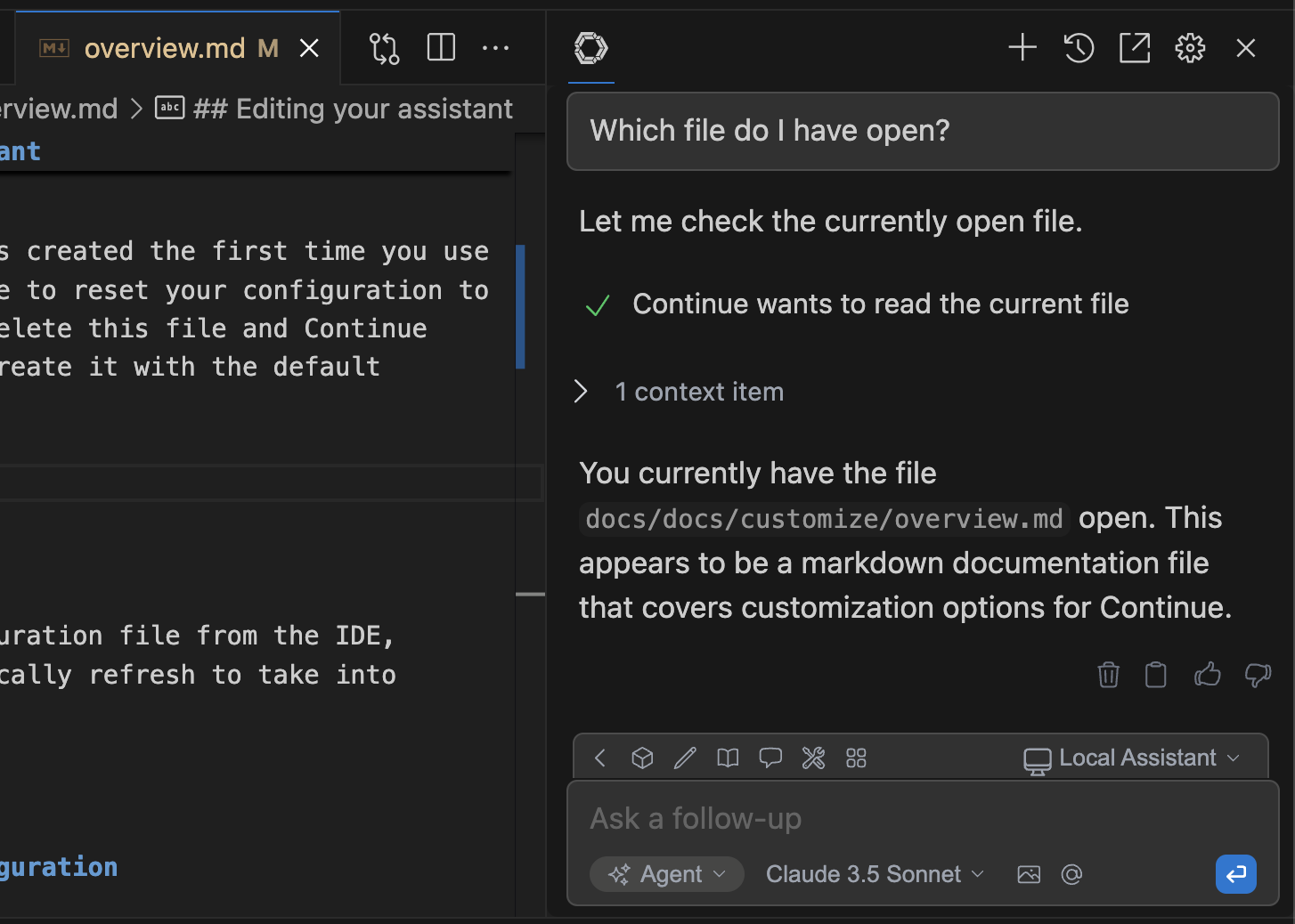

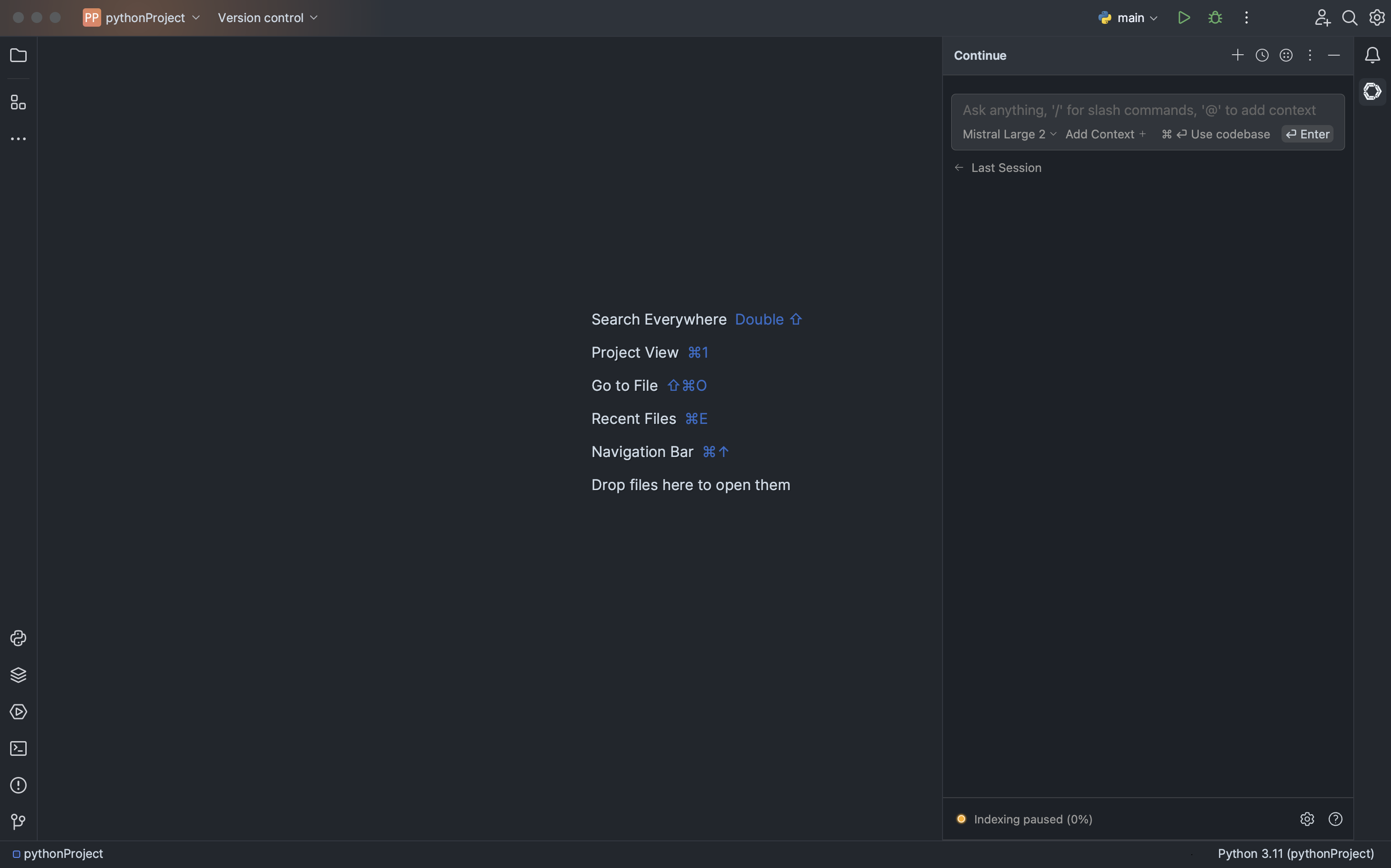

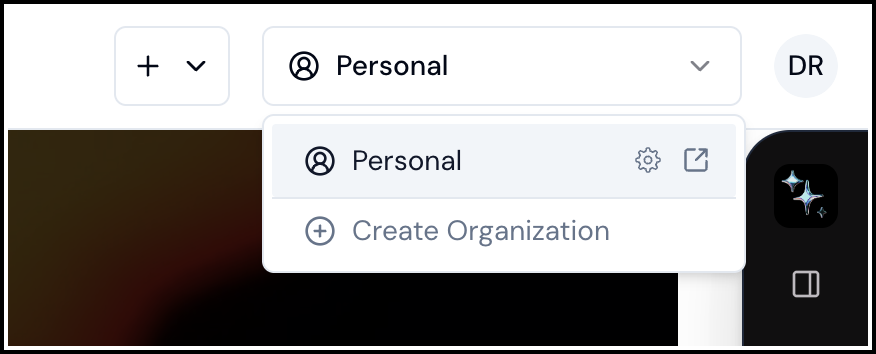

You can easily access your assistant configuration from the Continue Chat sidebar. Open the sidebar by pressing `cmd/ctrl` + `L` (VS Code) or `cmd/ctrl` + `J` (JetBrains) and click the Assistant selector above the main chat input. Then, you can hover over an assistant and click the `new window` (hub assistants) or `gear` (local assistants) icon.

* See [Editing Hub Assistants](/hub/assistants/edit-an-assistant) for more details on managing your hub assistant

* See the [Config Deep Dive](/customization/overview#configuration) for more details on configuring local assistants.

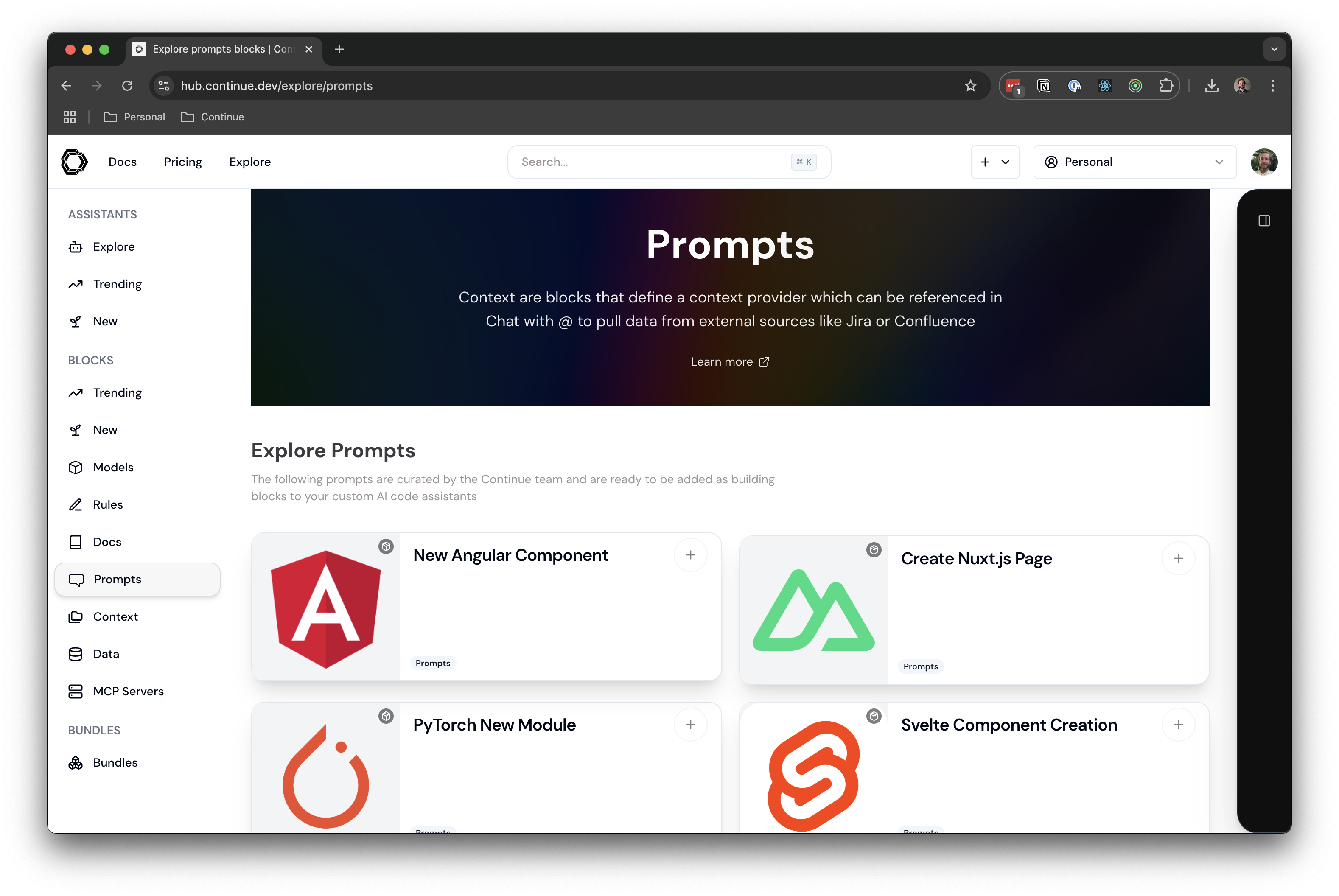

# Prompt Blocks

Source: https://docs.continue.dev/customization/prompts

These are the specialized instructions that shape how models respond:

* **Define interaction patterns** for specific tasks or frameworks

* **Encode domain expertise** for particular technologies

* **Ensure consistent guidance** aligned with organizational practices

* **Can be shared and reused** across multiple assistants

* **Act as automated code reviewers** that ensure consistency across teams

## Learn More

Prompt blocks have the same syntax as [prompt files](/customization/prompts). The `config.yaml` spec for `prompts` can be found [here](/reference#prompts).

# Rules Blocks

Source: https://docs.continue.dev/customization/rules

Rules allow you to provide specific instructions that guide how the AI assistant behaves when working with your code. Instead of the AI making assumptions about your coding standards, architecture patterns, or project-specific requirements, you can explicitly define guidelines that ensure consistent, contextually appropriate responses.

Think of these as the guardrails for your AI coding assistants:

* **Enforce company-specific coding standards** and security practices

* **Implement quality checks** that match your engineering culture

* **Create paved paths** for developers to follow organizational best practices

By implementing rules, you transform the AI from a generic coding assistant into a knowledgeable team member that understands your project's unique requirements and constraints.

### How Rules Work

Your assistant detects rule blocks and applies the specified rules while in [Agent](/features/agent/quick-start), [Chat](/features/chat/quick-start), and [Edit](/features/edit/quick-start) modes.

## Learn More

Learn more in the [rules deep dive](/customization/rules), and view [`rules`](/reference#rules) in the YAML Reference for more details.

# @Codebase

Source: https://docs.continue.dev/customize/context/codebase

Talk to your codebase

Continue indexes your codebase so that it can later automatically pull in the most relevant context from throughout your workspace. This is done via a combination of embeddings-based retrieval and keyword search. By default, all embeddings are calculated locally using `transformers.js` and stored locally in `~/.continue/index`.

**Note:** `transformers.js` cannot be used in JetBrains IDEs. However, you can

select a different embeddings model from [the list

here](../model-roles/embeddings.mdx).

Currently, the codebase retrieval feature is available as the "codebase" and "folder" context providers. You can use them by typing `@Codebase` or `@Folder` in the input box, and then asking a question. The contents of the input box will be compared with the embeddings from the rest of the codebase (or folder) to determine relevant files.

Here are some common use cases where it can be useful:

* Asking high-level questions about your codebase

* "How do I add a new endpoint to the server?"

* "Do we use VS Code's CodeLens feature anywhere?"

* "Is there any code written already to convert HTML to markdown?"

* Generate code using existing samples as reference

* "Generate a new React component with a date picker, using the same patterns as existing components"

* "Write a draft of a CLI application for this project using Python's argparse"

* "Implement the `foo` method in the `bar` class, following the patterns seen in other subclasses of `baz`.

* Use `@Folder` to ask questions about a specific folder, increasing the likelihood of relevant results

* "What is the main purpose of this folder?"

* "How do we use VS Code's CodeLens API?"

* Or any of the above examples, but with `@Folder` instead of `@Codebase`

Here are use cases where it is not useful:

* When you need the LLM to see *literally every* file in your codebase

* "Find everywhere where the `foo` function is called"

* "Review our codebase and find any spelling mistakes"

* Refactoring

* "Add a new parameter to the `bar` function and update usages"

## Configuration

There are a few options that let you configure the behavior of the `@codebase` context provider, which are the same for the `@folder` context provider:

```yaml title="config.yaml"

context:

- provider: codebase

params:

nRetrieve: 25

nFinal: 5

useReranking: true

```

```json title="config.json"

{

"contextProviders": [

{

"name": "codebase",

"params": {

"nRetrieve": 25,

"nFinal": 5,

"useReranking": true

}

}

]

}

```

### `nRetrieve`

Number of results to initially retrieve from vector database (default: 25)

### `nFinal`

Final number of results to use after re-ranking (default: 5)

### `useReranking`

Whether to use re-ranking, which will allow initial selection of `nRetrieve` results, then will use an LLM to select the top `nFinal` results (default: true)

## Ignore files during indexing

Continue respects `.gitignore` files in order to determine which files should not be indexed. If you'd like to exclude additional files, you can add them to a `.continueignore` file, which follows the exact same rules as `.gitignore`.

Continue also supports a **global** `.continueignore` file that will be respected for all workspaces, which can be created at `~/.continue/.continueignore`.

If you want to see exactly what files Continue has indexed, the metadata is stored in `~/.continue/index/index.sqlite`. You can use a tool like [DB Browser for SQLite](https://sqlitebrowser.org/) to view the `tag_catalog` table within this file.

If you need to force a refresh of the index, reload the VS Code window with cmd/ctrl + shift + p + "Reload Window".

## Repository map

Models in the Claude 3, Llama 3.1/3.2, Gemini 1.5, and GPT-4o families will automatically use a [repository map](../custom-providers.mdx#repository-map) during codebase retrieval, which allows the model to understand the structure of your codebase and use it to answer questions. Currently, the repository map only contains the filepaths in the codebase.

# @Docs

Source: https://docs.continue.dev/customize/context/documentation

Learn how to access and search your project's documentation directly within Continue

The [`@Docs` context provider](../custom-providers#docs) allows you to efficiently reference documentation directly within Continue.

## Enabling the `@Docs` context provider

To enable the `@Docs` context provider, add it to the list of context providers in your `config.json` file.

```yaml title="config.yaml"

context:

- provider: docs

```

```json title="config.json"

{

"contextProviders": [

{

"name": "docs"

}

]

}

```

## How It Works

The `@Docs` context provider works by

1. Crawling specified documentation sites

2. Generating embeddings for the chunked content

3. Storing the embeddings locally on your machine

4. Embedding chat input to include similar documentation chunks as context

## Indexing Your Own Documentation

### Hub `docs` Blocks

@Docs uses [`docs` blocks](../../hub/blocks/block-types.md#docs) in Assistants from the hub. Visit the hub to [explore `docs` blocks](https://hub.continue.dev/explore/docs) or [create your own](https://hub.continue.dev/new?type=block\&blockType=docs).

### Through the `@Docs` Context Provider

To add a single documentation site, we recommend using the **Add Documentation** Form within the GUI. This can be accessed

* from the `@Docs` context provider - type `@Docs` in the chat, hit `Enter`, and search for `Add Docs`

* from the `More` page (three dots icon) in the `@docs indexes` section

the `@Docs` context provider.

In the **Add Documentation** Form, enter a `Title` and `Start URL` for the site.

* `Title`: The name of the documentation site, used for identification in the UI.

* `Start URL`: The URL where the indexing process should begin.

Indexing will begin upon submission. Progress can be viewed in the form or later in the `@docs indexes` section of the `More` page.

Documentation sources may be suggested based on package files in your repo. This currently works for Python `requirements.txt` files and Node.js (Javascript/Typescript) `package.json` files.

* Packages with a valid documentation URL (with a `+` icon) can be clicked to immediately kick off indexing

* Packages with partial information (with a pen icon) can be clicked to fill the form with the available information

* Note that you can hover over the information icon to see where the package suggestion was found.

### In a configuration file

For bulk documentation site adds or edits, we recommend editing your global configuration file directly. Documentation sites are stored in an array within `docs` in your global configuration, as follows:

```yaml title="config.yaml"

docs:

- title: Nest.js

startUrl: https://docs.nestjs.com/

faviconUrl: https://docs.nestjs.com/favicon.ico

```

```json title="config.json"

{

"docs": [

{

"title": "Nest.js",

"startUrl": "https://docs.nestjs.com/",

"faviconUrl": "https://docs.nestjs.com/favicon.ico"

}

]

}

```

See [the config reference](/reference) for all documentation site configuration options.

Indexing will re-sync upon saving the configuration file.

## Configuration

### Using Your Embeddings Provider

If you have set up an [embeddings provider](../model-roles/embeddings.mdx), @docs will use your embeddings provider. Switching embeddings providers will trigger a re-index of all documentation sites in your configuration.

### Reranking

As with [@Codebase context provider configuration](./codebase#configuration), you can adjust the reranking behavior of the `@Docs` context provider with the `nRetrieve`, `nFinal`, and `useReranking`.

```yaml title="config.yaml"

context:

- provider: docs

params:

nRetrieve: 25 # The number of docs to retrieve from the embeddings query

nFinal: 5 # The number of docs chunks to return IF reranking

useReranking: true # use reranking if a reranker is configured (defaults to true)

```

```json title="config.json"

{

"contextProviders": [

{

"name": "docs",

"params": {

"nRetrieve": 25, // The number of docs to retrieve from the embeddings query

"nFinal": 5, // The number of docs chunks to return IF reranking

"useReranking": true // use reranking if a reranker is configured (defaults to true)

}

}

]

}

```

### GitHub

The GitHub API rate limits public requests to 60 per hour. If you want to reliably index GitHub repos, you can add a github token to your config file:

```yaml title="config.yaml"

context:

- provider: docs

params:

githubToken:

```

```json title="config.json"

{

"contextProviders": [

{

"name": "docs",

"params": {

"githubToken": "github_..."

}

}

]

}

```

### Local Crawling

By default, Continue crawls documentation sites using a specialized crawling service that provides the best experience for most users and documentation sites.

If your documentation is private, you can skip the default crawler and use a local crawler instead by setting `useLocalCrawling` to `true`.

```yaml title="config.yaml"

docs:

- title: My Private Docs

startUrl: http://10.2.1.2/docs

faviconUrl: http://10.2.1.2/docs/assets/favicon.ico,

useLocalCrawling: true

```

```json title="config.json"

{

"docs": [

{

"title": "My Private Docs",

"startUrl": "http://10.2.1.2/docs",

"faviconUrl": "http://10.2.1.2/docs/assets/favicon.ico",

"useLocalCrawling": true

}

]

}

```

Chromium crawling has been deprecated

{/* The default local crawler is a lightweight tool that cannot render sites that are dynamically generated using JavaScript. If your sites need to be rendered, you can enable the experimental `Use Chromium for Docs Crawling` feature from your [User Settings Page](../deep-dives/settings.md) This will download and install Chromium to `~/.continue/.utils`, and use it as the local crawler. */}

Further notes:

* If the site is only locally accessible, the default crawler will fail anyways and fall back to the local crawler. `useLocalCrawling` is especially useful if the URL itself is confidential.

* For GitHub Repos this has no effect because only the GitHub Crawler will be used, and if the repo is private it can only be accessed with a priveleged GitHub token anyways.

## Managing your docs indexes

You can view indexing statuses and manage your documentation sites from the `@docs indexes` section of the `More` page (three dots)

* Continue does not automatically re-index your docs. Use `Click to re-index` to trigger a reindex for a specific source

* While a site is indexing, click `Cancel indexing` to cancel the process

* Failed indexing attempts will show an error status bar and icon

* Delete a documentation site from your configuration using the trash icon

You can also view the overall status of currently indexing docs from a hideable progress bar at the bottom of the chat page

You can also use the following IDE command to force a re-index of all docs: `Continue: Docs Force Re-Index`.

## Examples

### VS Code minimal setup

The following configuration example works out of the box for VS Code. This uses the built-in embeddings provider with no reranking.

```yaml title="config.yaml"

context:

- provider: docs

docs:

- title: Nest.js

startUrl: https://docs.nestjs.com/

```

```json title="config.json"

{

"contextProviders": [

{

"name": "docs",

}

],

"docs": [

{

"title": "Nest.js",

"startUrl": "https://docs.nestjs.com/",

},

]

}

```

### Jetbrains minimal setup

Here is the equivalent minimal example for Jetbrains, which requires setting up an [embeddings provider](../model-roles/embeddings.mdx).

```yaml title="config.yaml"

models:

- name: LMStudio embedder

provider: lmstudio

model: nomic-ai/nomic-embed-text-v1.5-GGUF

roles:

- embed

context:

- provider: docs

docs:

- title: Nest.js

startUrl: https://docs.nestjs.com/

```

```json title="config.json"

{

"contextProviders": [

{

"name": "docs",

}

],

"docs": [

{

"title": "Nest.js",

"startUrl": "https://docs.nestjs.com/",

},

],

"embeddingsProvider": {

"provider": "lmstudio",

"model": "nomic-ai/nomic-embed-text-v1.5-GGUF"

}

}

```

### Full-power setup (VS Code or Jetbrains)

The following configuration example includes:

* Examples of both public and private documentation sources

* A custom embeddings provider

* A reranker model available, with reranking parameters customized

* A GitHub token to enable GitHub crawling

```yaml title="config.yaml"

models:

- name: LMStudio Nomic Text

provider: lmstudio

model: nomic-ai/nomic-embed-text-v1.5-GGUF

roles:

- embed

- name: Voyage Rerank-2

provider: voyage

apiKey:

model: rerank-2

roles:

- rerank

context:

- provider: docs

params:

githubToken:

nRetrieve: 25

nFinal: 5

useReranking: true

docs:

- title: Nest.js

startUrl: https://docs.nestjs.com/

- title: My Private Docs

startUrl: http://10.2.1.2/docs

faviconUrl: http://10.2.1.2/docs/assets/favicon.ico

maxDepth: 4

useLocalCrawling: true

```

```json title="config.json"

{

"contextProviders": [

{

"name": "docs",

"params": {

"githubToken": "github_...",

"nRetrieve": 25,

"nFinal": 5,

"useReranking": true

}

}

],

"docs": [

{

"title": "Nest.js",

"startUrl": "https://docs.nestjs.com/"

},

{

"title": "My Private Docs",

"startUrl": "http://10.2.1.2/docs",

"faviconUrl": "http://10.2.1.2/docs/assets/favicon.ico",

"maxDepth": 4,

"useLocalCrawling": true

}

],

"reranker": {

"name": "voyage",

"params": {

"model": "rerank-2",

"apiKey": ""

}

},

"embeddingsProvider": {

"provider": "lmstudio",

"model": "nomic-ai/nomic-embed-text-v1.5-GGUF"

}

}

```

{/* This could also involve enabling Chromium as a backup for local documentation the [User Settings Page](../deep-dives/settings.md). */}

# Context Providers

Source: https://docs.continue.dev/customize/custom-providers

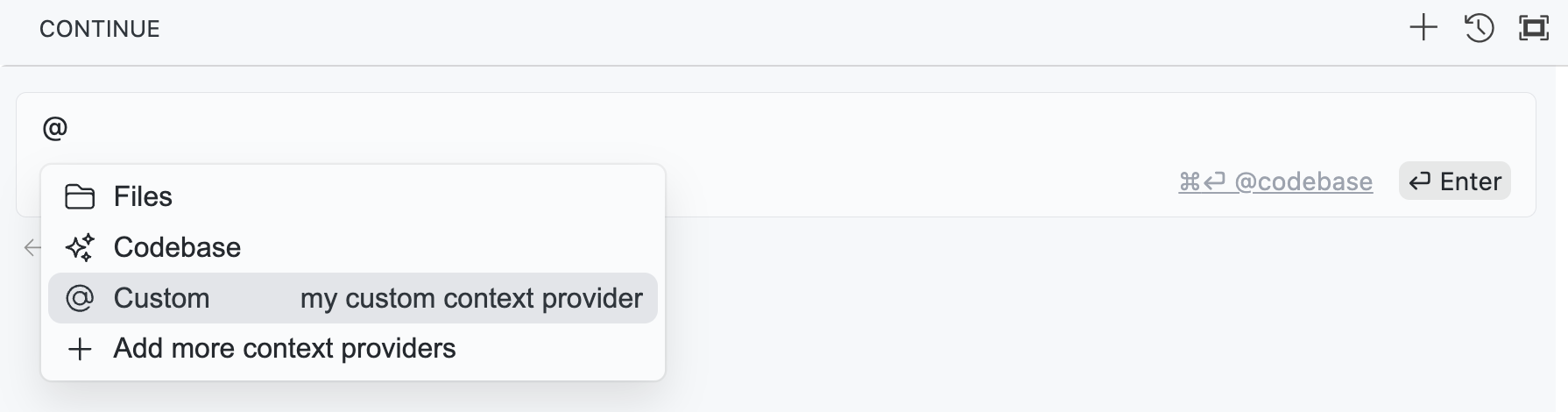

Context Providers allow you to type '@' and see a dropdown of content that can all be fed to the LLM as context. Every context provider is a plugin, which means if you want to reference some source of information that you don't see here, you can request (or build!) a new context provider.

As an example, say you are working on solving a new GitHub Issue. You type '@Issue' and select the one you are working on. Continue can now see the issue title and contents. You also know that the issue is related to the files 'readme.md' and 'helloNested.py', so you type '@readme' and '@hello' to find and select them. Now these 3 "Context Items" are displayed inline with the rest of your input.

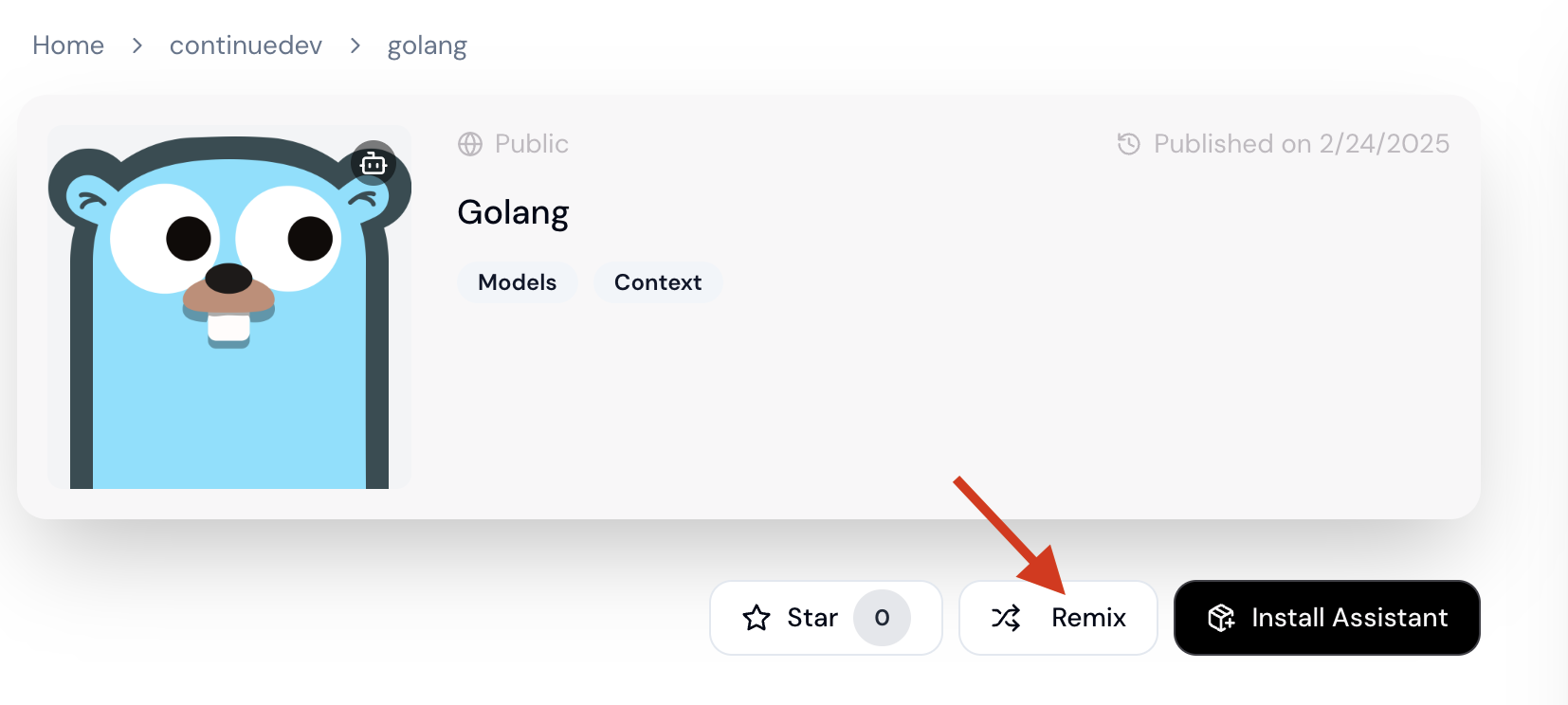

## Context blocks

You can add context providers to assistants using [`context` blocks](/hub/blocks/block-types#context). Explore available context blocks in [the hub](https://hub.continue.dev/explore/context).

## Built-in Context Providers

You can add any built-in context-providers in your config file as shown below:

### `@File`

Reference any file in your current workspace.

* YAML

* JSON

config.yaml

```

context: - provider: file

```

config.json

```json

{ "contextProviders": [{ "name": "file" }] }

```

### `@Code`

Reference specific functions or classes from throughout your project.

* YAML

* JSON

config.yaml

```

context: - provider: code

```

config.json

```json

{ "contextProviders": [{ "name": "code" }] }

```

### `@Git Diff`

Reference all of the changes you've made to your current branch. This is useful if you want to summarize what you've done or ask for a general review of your work before committing.

* YAML

* JSON

config.yaml

```

context: - provider: diff

```

config.json

```json

{ "contextProviders": [{ "name": "diff" }] }

```

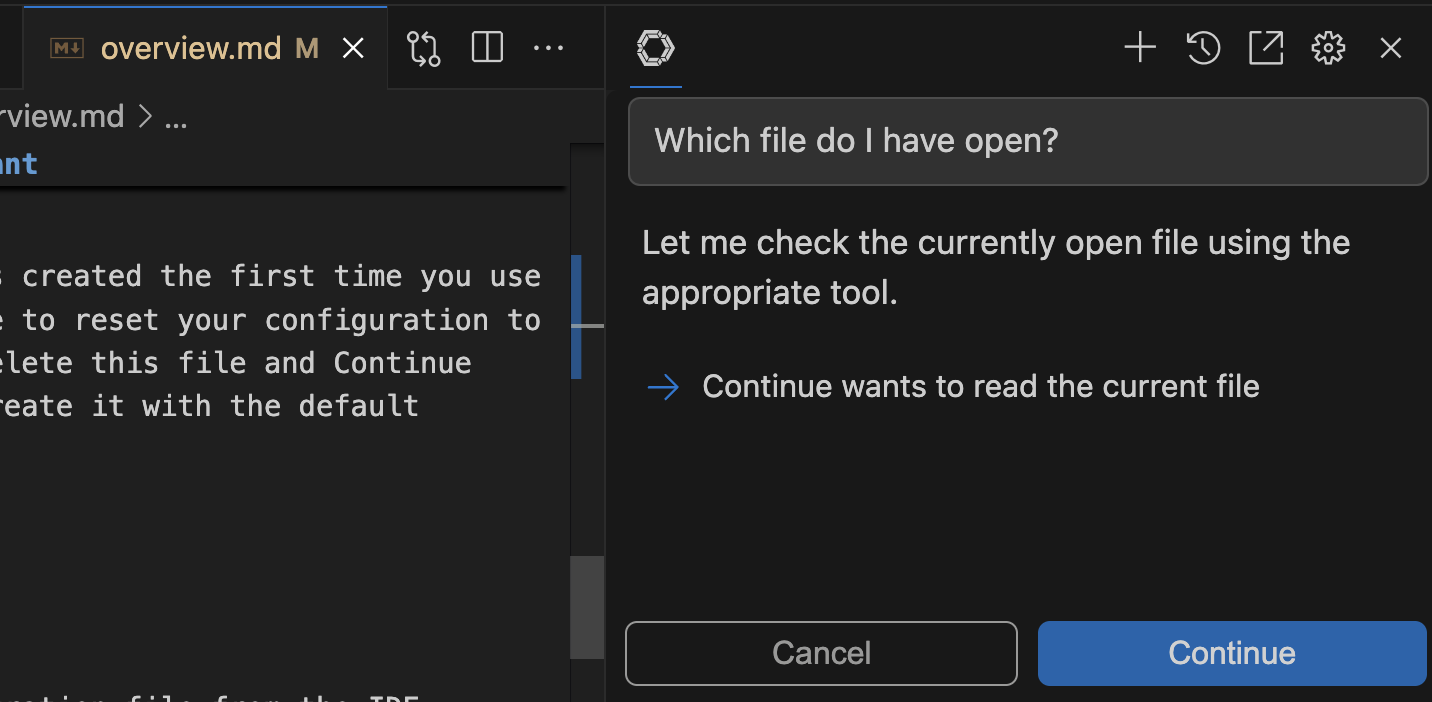

### `@Current File`

Reference the currently open file.

* YAML

* JSON

config.yaml

```

context: - provider: currentFile

```

config.json

```json

{ "contextProviders": [{ "name": "currentFile" }] }

```

### `@Terminal`

Reference the last command you ran in your IDE's terminal and its output.

* YAML

* JSON

config.yaml

```

context: - provider: terminal

```

config.json

```json

{ "contextProviders": [{ "name": "terminal" }] }

```

### `@Docs`

Reference the contents from any documentation site.

* YAML

* JSON

config.yaml

```

context: - provider: docs

```

config.json

```json

{ "contextProviders": [{ "name": "docs" }] }

```

Note that this will only enable the `@Docs` context provider.

To use it, you need to add a documentation site to your config file. See the [docs](/customization/overview#documentation-context) page for more information.

### `@Open`

Reference the contents of all of your open files. Set `onlyPinned` to `true` to only reference pinned files.

* YAML

* JSON

config.yaml

```

context: - provider: open params: onlyPinned: true

```

config.json

```json

{ "contextProviders": [{ "name": "open", "params": { "onlyPinned": true } }] }

```

### `@Web`

Reference relevant pages from across the web, automatically determined from your input.

Optionally, set `n` to limit the number of results returned (default 6).

* YAML

* JSON

config.yaml

```

context: - provider: web params: n: 5

```

config.json

```json

{ "contextProviders": [{ "name": "web", "params": { "n": 5 } }] }

```

### `@Codebase`

Reference the most relevant snippets from your codebase.

* YAML

* JSON

config.yaml

```

context: - provider: codebase

```

config.json

```json

{ "contextProviders": [{ "name": "codebase" }] }

```

Read more about indexing and retrieval [here](/customization/overview#codebase-context).

### `@Folder`

Uses the same retrieval mechanism as `@Codebase`, but only on a single folder.

* YAML

* JSON

config.yaml

```

context: - provider: folder

```

config.json

```json

{ "contextProviders": [{ "name": "folder" }] }

```

### `@Search`

Reference the results of codebase search, just like the results you would get from VS Code search.

* YAML

* JSON

config.yaml

```

context: - provider: search params: maxResults: 100 # optional, defaults to 200

```

config.json

```json

{ "contextProviders": [ { "name": "search", "params": { "maxResults": 100 // optional, defaults to 200 } } ]}

```

This context provider is powered by [ripgrep](https://github.com/BurntSushi/ripgrep).

### `@Url`

Reference the markdown converted contents of a given URL.

* YAML

* JSON

config.yaml

```

context: - provider: url

```

config.json

```json

{ "contextProviders": [{ "name": "url" }] }

```

### `@Clipboard`

Reference recent clipboard items

* YAML

* JSON

config.yaml

```

context: - provider: clipboard

```

config.json

```json

{ "contextProviders": [{ "name": "clipboard" }] }

```

### `@Tree`

Reference the structure of your current workspace.

* YAML

* JSON

config.yaml

```

context: - provider: tree

```

config.json

```json

{ "contextProviders": [{ "name": "tree" }] }

```

### `@Problems`

Get Problems from the current file.

* YAML

* JSON

config.yaml

```

context: - provider: problems

```

config.json

```json

{ "contextProviders": [{ "name": "problems" }] }

```

### `@Debugger`

Reference the contents of the local variables in the debugger. Currently only available in VS Code.

* YAML

* JSON

config.yaml

```

context: - provider: debugger params: stackDepth: 3

```

config.json

```json

{ "contextProviders": [{ "name": "debugger", "params": { "stackDepth": 3 } }] }

```

Uses the top *n* levels (defaulting to 3) of the call stack for that thread.

### `@Repository Map`

Reference the outline of your codebase. By default, signatures are included along with file in the repo map.

`includeSignatures` params can be set to false to exclude signatures. This could be necessary for large codebases and/or to reduce context size significantly. Signatures will not be included if indexing is disabled.

* YAML

* JSON

config.yaml

```

context: - provider: repo-map params: includeSignatures: false # default true

```

config.json

```json

{ "contextProviders": [ { "name": "repo-map", "params": { "includeSignatures": false // default true } } ]}

```

Provides a list of files and the call signatures of top-level classes, functions, and methods in those files. This helps the model better understand how a particular piece of code relates to the rest of the codebase.

In the submenu that appears, you can select either `Entire codebase`, or specify a subfolder to generate the repostiory map from.

This context provider is inpsired by [Aider's repository map](https://aider.chat/2023/10/22/repomap.html).

### `@Operating System`

Reference the architecture and platform of your current operating system.

* YAML

* JSON

config.yaml

```

context: - provider: os

```

config.json

```json

{ "contextProviders": [{ "name": "os" }] }

```

### Model Context Protocol

The [Model Context Protocol](https://modelcontextprotocol.io/introduction) is a standard proposed by Anthropic to unify prompts, context, and tool use. Continue supports any MCP server with the MCP context provider. Read their [quickstart](https://modelcontextprotocol.io/quickstart) to learn how to set up a local server and then set up your configuration like this:

* YAML

* JSON

config.yaml

```

mcpServers: - name: My MCP Server command: uvx args: - mcp-server-sqlite - --db-path - /Users/NAME/test.db

```

config.json

```json

{

"experimental": {

"modelContextProtocolServers": [

{

"transport": {

"type": "stdio",

"command": "uvx",

"args": ["mcp-server-sqlite", "--db-path", "/Users/NAME/test.db"]

}

}

]

}

}

```

You'll then be able to type "@" and see "MCP" in the context providers dropdown.

### `@Issue`

Reference the conversation in a GitHub issue.

* YAML

* JSON

config.yaml

```

context: - provider: issue params: repos: - owner: continuedev repo: continue githubToken: ghp_xxx

```

config.json

```json

{

"contextProviders": [

{

"name": "issue",

"params": {

"repos": [{ "owner": "continuedev", "repo": "continue" }],

"githubToken": "ghp_xxx"

}

}

]

}

```

Make sure to include your own [GitHub personal access token](https://docs.github.com/en/authentication/keeping-your-account-and-data-secure/managing-your-personal-access-tokens#creating-a-fine-grained-personal-access-token) to avoid being rate-limited.

### `@Database`

Reference table schemas from Sqlite, Postgres, MSSQL, and MySQL databases.

* YAML

* JSON

config.yaml

```

context: - provider: database params: connections: - name: examplePostgres connection_type: postgres connection: user: username host: localhost database: exampleDB password: yourPassword port: 5432 - name: exampleMssql connection_type: mssql connection: user: username server: localhost database: exampleDB password: yourPassword - name: exampleSqlite connection_type: sqlite connection: filename: /path/to/your/sqlite/database.db

```

config.json

```json

{

"contextProviders": [

{

"name": "database",

"params": {

"connections": [

{

"name": "examplePostgres",

"connection_type": "postgres",

"connection": {

"user": "username",

"host": "localhost",

"database": "exampleDB",

"password": "yourPassword",

"port": 5432

}

},

{

"name": "exampleMssql",

"connection_type": "mssql",

"connection": {

"user": "username",

"server": "localhost",

"database": "exampleDB",

"password": "yourPassword"

}

},

{

"name": "exampleSqlite",

"connection_type": "sqlite",

"connection": { "filename": "/path/to/your/sqlite/database.db" }

}

]

}

}

]

}

```

Each connection should include a unique name, the `connection_type`, and the necessary connection parameters specific to each database type.

Available connection types:

* `postgres`

* `mysql`

* `sqlite`

### `@Postgres`

Reference the schema of a table, and some sample rows

* YAML

* JSON

config.yaml

```

context: - provider: postgres params: host: localhost port: 5436 user: myuser password: catsarecool database: animals schema: public sampleRows: 3

```

config.json

```json

{

"contextProviders": [

{

"name": "postgres",

"params": {

"host": "localhost",

"port": 5436,

"user": "myuser",

"password": "catsarecool",

"database": "animals",

"schema": "public",

"sampleRows": 3

}

}

]

}

```

The only required settings are those for creating the database connection: `host`, `port`, `user`, `password`, and `database`.

By default, the `schema` filter is set to `public`, and the `sampleRows` is set to 3. You may unset the schema if you want to include tables from all schemas.

[Here is a short demo.](https://github.com/continuedev/continue/pull/859)

### `@Google`

Reference the results of a Google search.

* YAML

* JSON

config.yaml

```

context: - provider: google params: serperApiKey:

```

config.json

```json

{

"contextProviders": [

{

"name": "google",

"params": { "serperApiKey": "" }

}

]

}

```

For example, type "@Google python tutorial" if you want to search and discuss ways of learning Python.

Note: You can get an API key for free at [serper.dev](https://serper.dev).

### `@Gitlab Merge Request`

Reference an open MR for this branch on GitLab.

* YAML

* JSON

config.yaml

```

context: - provider: gitlab-mr params: token: "..."

```

config.json

```json

{ "contextProviders": [{ "name": "gitlab-mr", "params": { "token": "..." } }] }

```

You will need to create a [personal access token](https://docs.gitlab.com/ee/user/profile/personal_access_tokens.html) with the `read_api` scope.

#### Using Self-Hosted GitLab

You can specify the domain to communicate with by setting the `domain` parameter in your configurtion. By default this is set to `gitlab.com`.

* YAML

* JSON

config.yaml

```

context: - provider: gitlab-mr params: token: "..." domain: "gitlab.example.com"

```

config.json

```json

{

"contextProviders": [

{

"name": "gitlab-mr",

"params": { "token": "...", "domain": "gitlab.example.com" }

}

]

}

```

#### Filtering Comments

If you select some code to be edited, you can have the context provider filter out comments for other files. To enable this feature, set `filterComments` to `true`.

### `@Jira`

Reference the conversation in a Jira issue.

* YAML

* JSON

config.yaml

```

context: - provider: jira params: domain: company.atlassian.net token: ATATT...

```

config.json

```json

{

"contextProviders": [

{

"name": "jira",

"params": { "domain": "company.atlassian.net", "token": "ATATT..." }

}

]

}

```

Make sure to include your own [Atlassian API Token](https://id.atlassian.com/manage-profile/security/api-tokens), or use your `email` and `token`, with token set to your password for basic authentication. If you use your own Atlassian API Token, don't configure your email.

#### Jira Datacenter Support

This context provider supports both Jira API version 2 and 3. It will use version 3 by default since that's what the cloud version uses, but if you have the datacenter version of Jira, you'll need to set the API Version to 2 using the `apiVersion` property.

* YAML

* JSON

config.yaml

```

context: - provider: jira params: apiVersion: "2"

```

config.json

```json

{ "contextProviders": [{ "name": "jira", "params": { "apiVersion": "2" } }] }

```

#### Issue Query

By default, the following query will be used to find issues:

```

assignee = currentUser() AND resolution = Unresolved order by updated DESC

```

You can override this query by setting the `issueQuery` parameter.

#### Max results

You can set the `maxResults` parameter to limit the number of results returned. The default is `50`.

### `@Discord`

Reference the messages in a Discord channel.

* YAML

* JSON

config.yaml

```

context: - provider: discord params: discordKey: "bot token" guildId: "1234567890" channels: - id: "123456" name: "example-channel" - id: "678901" name: "example-channel-2"

```

config.json

```json

{

"contextProviders": [

{

"name": "discord",

"params": {

"discordKey": "bot token",

"guildId": "1234567890",

"channels": [

{ "id": "123456", "name": "example-channel" },

{ "id": "678901", "name": "example-channel-2" }

]

}

}

]

}

```

Make sure to include your own [Bot Token](https://discord.com/developers/applications), and join it to your related server . If you want more granular control over which channels are searched, you can specify a list of channel IDs to search in. If you don't want to specify any channels, just include the guild id(Server ID) and all channels will be included. The provider only reads text channels.

### `@HTTP`

The HttpContextProvider makes a POST request to the url passed in the configuration. The server must return 200 OK with a ContextItem object or an array of ContextItems.

* YAML

* JSON

config.yaml

```

context: - provider: http params: url: "https://api.example.com/v1/users"

```

config.json

```json

{

"contextProviders": [

{ "name": "http", "params": { "url": "https://api.example.com/v1/users" } }

]

}

```

The receiving URL should expect to receive the following parameters:

POST parameters

```json

{ query: string, fullInput: string}

```

The response 200 OK should be a JSON object with the following structure:

Response

```

[ { "name": "", "description": "", "content": "" }]// OR{ "name": "", "description": "", "content": ""}

```

### `@Commits`

Reference specific git commit metadata and diff or all of the recent commits.

* YAML

* JSON

config.yaml

```

context: - provider: commit params: Depth: 50 LastXCommitsDepth: 10

```

config.json

```json

{

"contextProviders": [

{ "name": "commit", "params": { "Depth": 50, "LastXCommitsDepth": 10 } }

]

}

```

The depth is how many commits will be loaded into the submenu, defaults to 50. The LastXCommitsDepth is how many recent commits will be included, defaults to 10.

### `@Greptile`

Query a [Greptile](https://www.greptile.com/) index of the current repo/branch.

* YAML

* JSON

config.yaml

```

context: - provider: greptile params: greptileToken: "..." githubToken: "..."

```

config.json

```json

{

"contextProviders": [

{

"name": "greptile",

"params": { "GreptileToken": "...", "GithubToken": "..." }

}

]

}

```

### Requesting Context Providers

Not seeing what you want? Create an issue [here](https://github.com/continuedev/continue/issues/new?assignees=TyDunn\&labels=enhancement\&projects=\&template=feature-request-%F0%9F%92%AA.md\&title=) to request a new Context Provider.

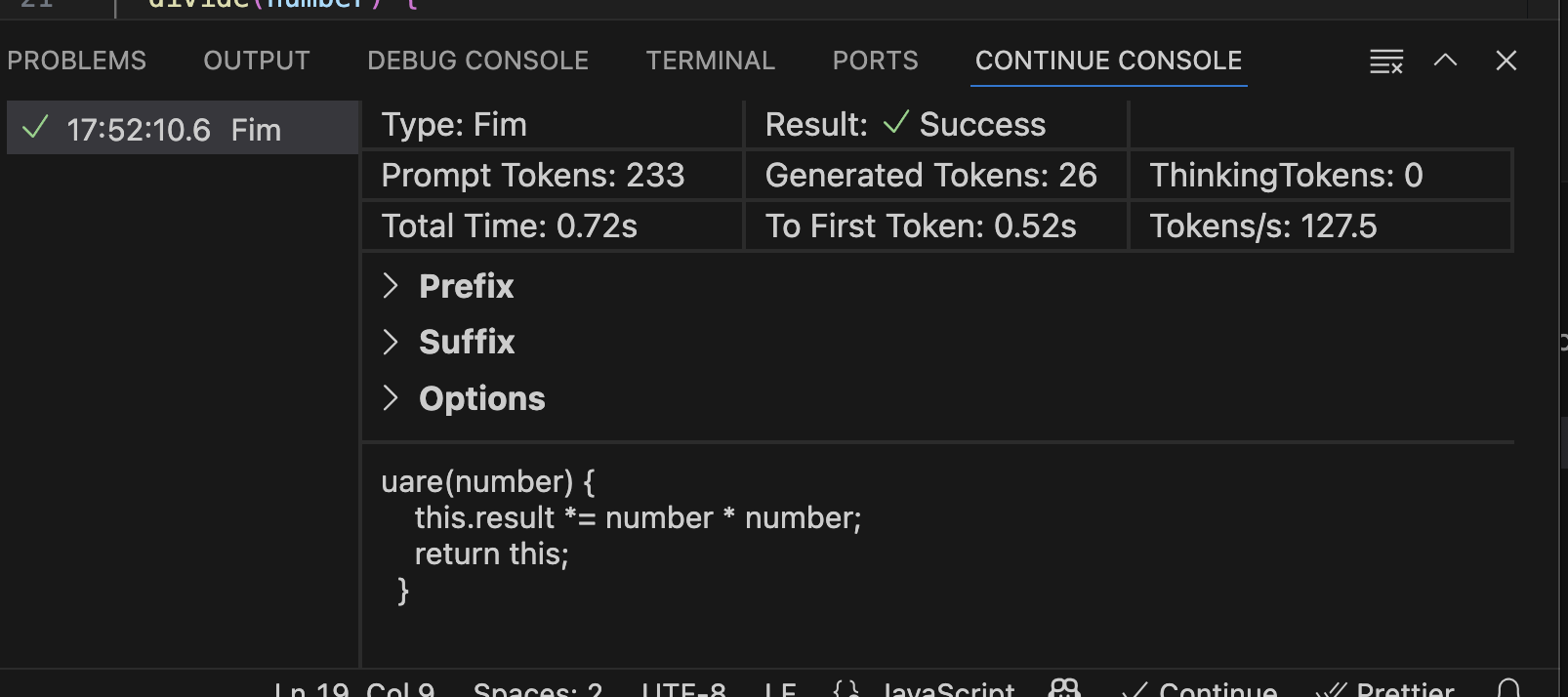

# Autocomplete

Source: https://docs.continue.dev/customize/deep-dives/autocomplete

Deep dive into Continue's autocomplete functionality and configuration

### Setting up with Codestral (recommended)

If you want to have the best autocomplete experience, we recommend using Codestral, which is available through the [Mistral API](https://console.mistral.ai/). To do this, obtain an API key and add it to your config:

[Mistral Codestral model block](https://hub.continue.dev/mistral/codestral)

```yaml title="config.yaml"

models:

- name: Codestral

provider: mistral

model: codestral-latest

apiKey:

roles:

- autocomplete

```

```json title="config.json"

{

"tabAutocompleteModel": {

"title": "Codestral",

"provider": "mistral",

"model": "codestral-latest",

"apiKey": ""

}

}

```

**Codestral API Key**: The API keys for Codestral and the general Mistral APIs

are different. If you are using Codestral, you probably want a Codestral API

key, but if you are sharing the key as a team or otherwise want to use

`api.mistral.ai`, then make sure to set `"apiBase":

"https://api.mistral.ai/v1"` in your `tabAutocompleteModel`.

### Setting up with Ollama (default)

If you'd like to run your autocomplete model locally, we recommend using Ollama. To do this, first download the latest version of Ollama from [here](https://ollama.ai). Then, run the following command to download our recommended model:

```bash

ollama run qwen2.5-coder:1.5b

```

Then, add the model to your configuration:

[Ollama Qwen 2.5 Coder 1.5B model block](https://hub.continue.dev/ollama/qwen2.5-coder-1.5b)

```yaml title="config.yaml"

models:

- name: Qwen 1.5b Autocomplete Model

provider: ollama

model: qwen2.5-coder:1.5b

roles:

- autocomplete

```

```json title="config.json"

{

"tabAutocompleteModel": {

"title": "Qwen 1.5b Autocomplete Model",

"provider": "ollama",

"model": "qwen2.5-coder:1.5b",

}

}

```

Once the model has been downloaded, you should begin to see completions in VS Code.

NOTE: Typically, thinking-type models are not recommended as they generate more slowly and are not suitable for scenarios that require speed.

However, if you use any thinking-switchable models, you can configure these models for autocomplete functions by turning off the thinking mode.

For example:

```yaml title="config.yaml"

models:

- name: Qwen3 without Thinking for Autocomplete

provider: ollama

model: qwen3:4b # qwen3 is a thinking-switchable model

roles:

- autocomplete

requestOptions:

extraBodyProperties:

think: false # turning off the thinking

```

Then, in the continue panel, select this model as the default model for autocomplete.

## Configuration Options

### Hub Autocomplete models

Explore autocomplete model configurations on [the hub](https://hub.continue.dev/explore/models?roles=autocomplete)

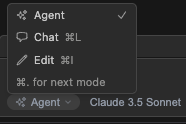

### Autocomplete User Settings

{/* - `Use autocomplete cache`: If on, caches completions */}

The following settings can be configured for autocompletion in the IDE extension [User Settings Page](../settings.md):

* `Multiline Autocompletions`: Controls multiline completions for autocomplete. Can be set to `always`, `never`, or `auto`. Defaults to `auto`

* `Disable autocomplete in files`: List of comma-separated glob pattern to disable autocomplete in matching files. E.g., "\_/.md, \*/.txt"

### `config.json` Configuration

The `config.json` configuration format (deprecated) offers further configuration options through `tabAutocompleteOptions`. See the [JSON Reference](/reference) for more details.

## FAQs

### I want better completions, should I use GPT-4?

Perhaps surprisingly, the answer is no. The models that we suggest for autocomplete are trained with a highly specific prompt format, which allows them to respond to requests for completing code (see examples of these prompts [here](https://github.com/continuedev/continue/blob/main/core/autocomplete/templating/AutocompleteTemplate.ts)). Some of the best commercial models like GPT-4 or Claude are not trained with this prompt format, which means that they won't generate useful completions. Luckily, a huge model is not required for great autocomplete. Most of the state-of-the-art autocomplete models are no more than 10b parameters, and increasing beyond this does not significantly improve performance.

### I'm not seeing any completions

Follow these steps to ensure that everything is set up correctly:

1. Make sure you have the "Enable Tab Autocomplete" setting checked (in VS Code, you can toggle by clicking the "Continue" button in the status bar, and in JetBrains by going to Settings -> Tools -> Continue).

2. Make sure you have downloaded Ollama.

3. Run `ollama run qwen2.5-coder:1.5b` to verify that the model is downloaded.

4. Make sure that any other completion providers are disabled (e.g. Copilot), as they may interfere.

5. Check the output of the logs to find any potential errors: cmd/ctrl + shift + P -> "Toggle Developer Tools" -> "Console" tab in VS Code, \~/.continue/logs/core.log in JetBrains.

6. Check VS Code settings to make sure that `"editor.inlineSuggest.enabled"` is set to `true` (use cmd/ctrl + , then search for this and check the box)

7. If you are still having issues, please let us know in our [Discord](https://discord.gg/vapESyrFmJ) and we'll help as soon as possible.

### Completions are only ever single-line

To ensure that you receive multi-line completions, you can set `"multilineCompletions": "always"` in `tabAutocompleteOptions`. By default, it is `"auto"`. If you still find that you are only seeing single-line completions, this may be because some models tend to produce shorter completions when starting in the middle of a file. You can try temporarily moving text below your cursor out of your active file, or switching to a larger model.

### Can I configure a "trigger key" for autocomplete?

Yes, in VS Code, if you don't want to be shown suggestions automatically you can:

1. Set `"editor.inlineSuggest.enabled": false` in VS Code settings to disable automatic suggestions

2. Open "Keyboard Shortcuts" (cmd/ctrl+k, cmd/ctrl+s) and search for `editor.action.inlineSuggest.trigger`

3. Click the "+" icon to add a new keybinding

4. Press the key combination you want to use to trigger suggestions (e.g. cmd/ctrl + space)

5. Now whenever you want to see a suggestion, you can press your key binding (e.g. cmd/ctrl + space) to trigger suggestions manually

### Is there a shortcut to accept one line at a time?

This is a built-in feature of VS Code, but it's just a bit hidden. Follow these settings to reassign the keyboard shortcuts in VS Code:

1. Press Ctrl+Shift+P, type the command: `Preferences: Open Keyboard Shortcuts`, and enter the keyboard shortcuts settings page.

2. Search for `editor.action.inlineSuggest.acceptNextLine`.

3. Set the key binding to Tab.

4. Set the trigger condition (when) to `inlineSuggestionVisible && !editorReadonly`.

This will make multi-line completion (including continue and from VS Code built-in or other plugin snippets) still work, and you will see multi-line completion. However, Tab will only fill in one line at a time. Any unnecessary code can be canceled with Esc.

If you need to apply all the code, just press Tab multiple times.

### How to turn off autocomplete

#### VS Code

Click the "Continue" button in the status panel at the bottom right of the screen. The checkmark will become a "cancel" symbol and you will no longer see completions. You can click again to turn it back on.

Alternatively, open VS Code settings, search for "Continue" and uncheck the box for "Enable Tab Autocomplete".

You can also use the default shortcut to disable autocomplete directly using a chord: press and hold ctrl/cmd + K (continue holding ctrl/cmd) and press ctrl/cmd + A. This will turn off autocomplete without navigating through settings.

#### JetBrains

Open Settings -> Tools -> Continue and uncheck the box for "Enable Tab Autocomplete".

#### Feedback

If you're turning off autocomplete, we'd love to hear how we can improve! Please let us know in our [Discord](https://discord.gg/vapESyrFmJ) or file an issue on GitHub.

# Configuration

Source: https://docs.continue.dev/customize/deep-dives/configuration

Configuration

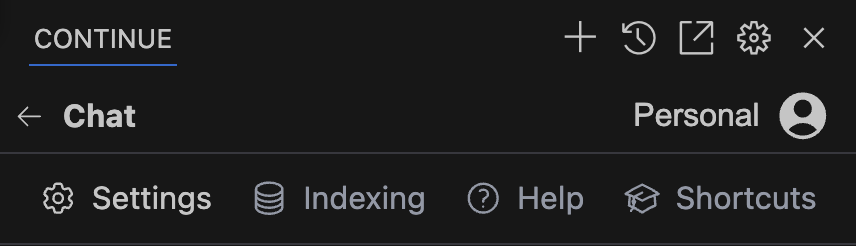

You can easily access your assistant configuration from the Continue Chat sidebar. Open the sidebar by pressing cmd/ctrl + L (VS Code) or cmd/ctrl + J (JetBrains) and click the Assistant selector above the main chat input. Then, you can hover over an assistant and click the `new window` (hub assistants) or `gear` (local assistants) icon.

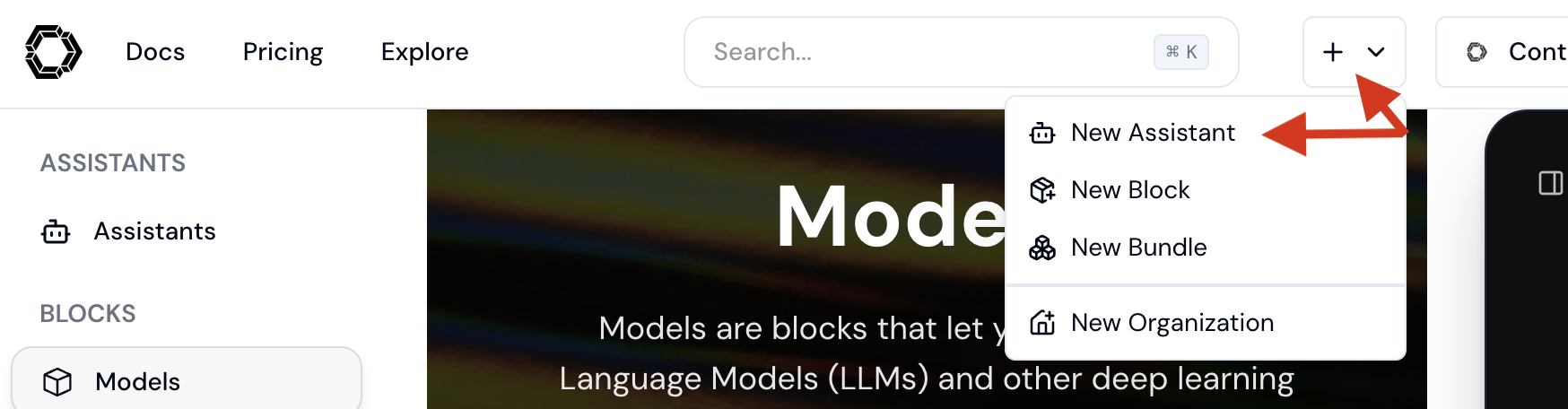

## Hub Assistants

Hub Assistants can be managed in [the Hub](https://hub.continue.dev). See [Editing an Assistant](../../hub/assistants/edit-an-assistant.md)

## YAML Config

Local user-level configuration is stored and can be edited in your home directory in `config.yaml`:

* `~/.continue/config.yaml` (MacOS / Linux)

* `%USERPROFILE%\.continue\config.yaml` (Windows)

To open this `config.yaml`, you need to open the assistants dropdown in the top-right portion of the chat input. On that dropdown beside the "Local Assistant" option, select the cog icon. It will open the local `config.yaml`.

When editing this file, you can see the available options suggested as you type, or check the reference below. When you save a config file from the IDE, Continue will automatically refresh to take into account your changes. A config file is automatically created the first time you use Continue, and always automatically generated with default values if it doesn't exist.

See the full reference for `config.yaml` [here](/reference).

## Deprecated configuration methods

View the `config.json` migration guide [here](../yaml-migration.mdx)

* [`config.json`](/reference) - The original configuration format which is stored in a file at the same location as `config.yaml`

* [`.continuerc.json`](#continuercjson) - Workspace-level configuration

* [`config.ts`](#configts) - Advanced configuration (probably unnecessary) - a TypeScript file in your home directory that can be used to programmatically modify (*merged*) the `config.json` schema:

* `~/.continue/config.ts` (MacOS / Linux)

* `%USERPROFILE%\.continue\config.ts` (Windows)

### `.continuerc.json`

The format of `.continuerc.json` is the same as `config.json`, plus one *additional* property `mergeBehavior`, which can be set to either "merge" or "overwrite". If set to "merge" (the default), `.continuerc.json` will be applied on top of `config.json` (arrays and objects are merged). If set to "overwrite", then every top-level property of `.continuerc.json` will overwrite that property from `config.json`.

Example

```json title=".continuerc.json"

{

"tabAutocompleteOptions": {

"disable": true

},

"mergeBehavior": "overwrite"

}

```

### `config.ts`

`config.yaml` or `config.json` can handle the vast majority of necessary configuration, so we recommend using it whenever possible. However, if you need to programmatically extend Continue configuration, you can use a `config.ts` file, placed at `~/.continue/config.ts` (MacOS / Linux) or `%USERPROFILE%\.continue\config.ts` (Windows).

`config.ts` must export a `modifyConfig` function, like:

```ts title="config.ts"

export function modifyConfig(config: Config): Config {

config.slashCommands?.push({

name: "commit",

description: "Write a commit message",

run: async function* (sdk) {

// The getDiff function takes a boolean parameter that indicates whether

// to include unstaged changes in the diff or not.

const diff = await sdk.ide.getDiff(false); // Pass false to exclude unstaged changes

for await (const message of sdk.llm.streamComplete(

`${diff}\n\nWrite a commit message for the above changes. Use no more than 20 tokens to give a brief description in the imperative mood (e.g. 'Add feature' not 'Added feature'):`,

new AbortController().signal,

{

maxTokens: 20,

},

)) {

yield message;

}

},

});

return config;

}

```

# Development Data

Source: https://docs.continue.dev/customize/deep-dives/development-data

Collecting data on how you build software

When you use Continue, you automatically collect data on how you build software. By default, this development data is saved to `.continue/dev_data` on your local machine.

You can read more about how development data is generated as a byproduct of LLM-aided development and why we believe that you should start collecting it now: [It’s time to collect data on how you build software](https://blog.continue.dev/its-time-to-collect-data-on-how-you-build-software)

## Custom Data Destinations

You can also configure custom destinations for your data, including remote HTTP endpoints and local file directories.

For hub assistants, data destinations are configured in `data` blocks. Visit the hub to [explore example data blocks](https://hub.continue.dev/explore/data) or [create your own](https://hub.continue.dev/new?type=block\&blockType=data).

See more details about adding `data` blocks to your configuration files in the [YAML specification](/reference#data)

When sending development data to your own HTTP endpoint, it will receive an event JSON blob at the given `schema` version. You can view event names, schema versions, and fields [here in the source code](https://github.com/continuedev/continue/tree/main/packages/config-yaml/src/schemas/data).

# MCP

Source: https://docs.continue.dev/customize/deep-dives/mcp

MCP use and customization

As AI systems get better, they're still held back by their training data and

can't access real-time information or specialized tools. The [Model Context

Protocol](https://modelcontextprotocol.io/introduction) (MCP) fixes this by

letting AI models connect with outside data sources, tools, and environments.

This allows smooth sharing of information and abilities between AI systems and

the wider digital world. This standard, created by Anthropic to bring together

prompts, context, and tool use, is key for building truly useful AI experiences

that can be set up with custom tools.

## How it works

Currently custom tools can be configured using the Model Context

Protocol standard to unify prompts, context, and tool use.

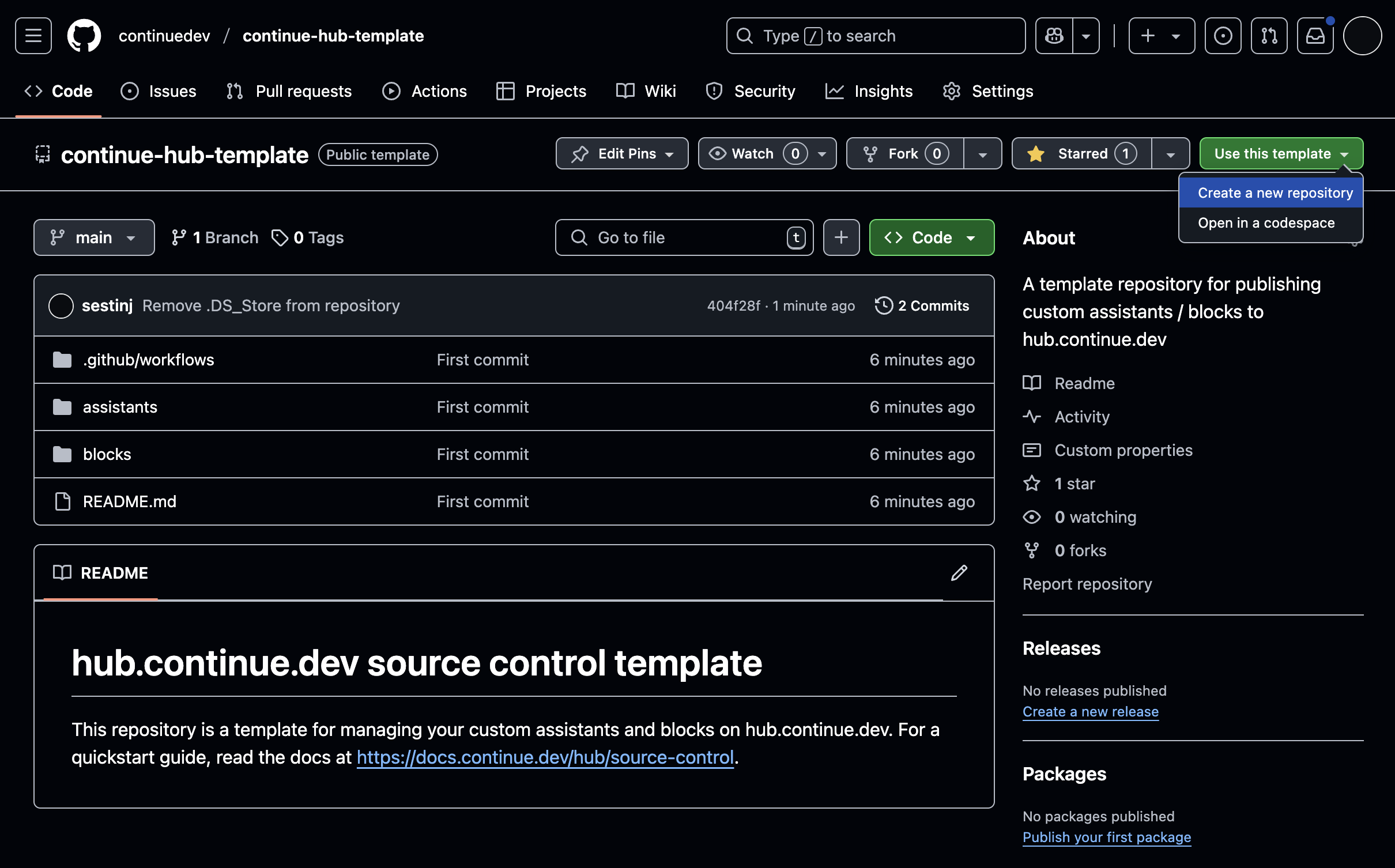

MCP Servers can be added to hub Assistants using `mcpServers` blocks. You can

explore available MCP server blocks

[here](https://hub.continue.dev/explore/mcp).

MCP can only be used in the **agent** mode.

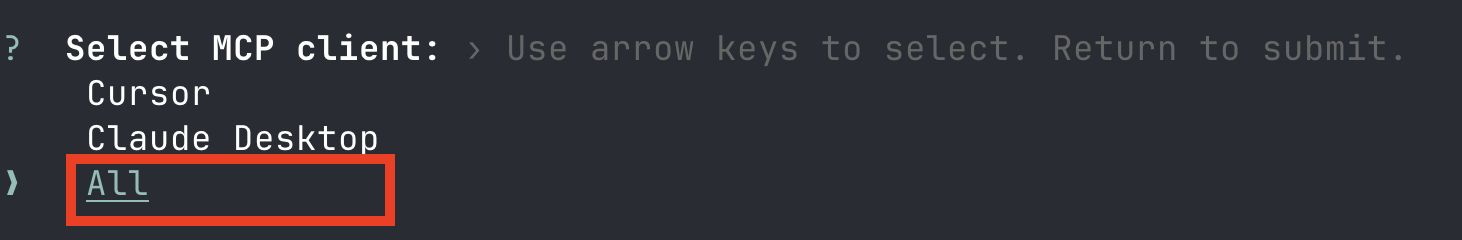

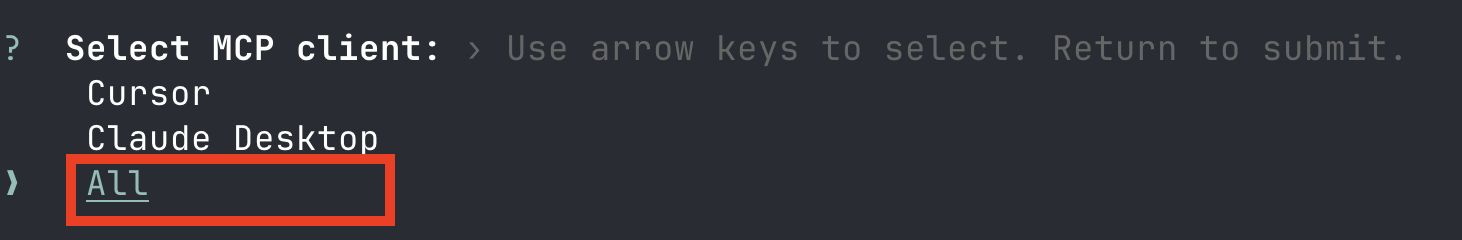

## Quick Start

Below is a quick example of setting up a new MCP server for use in your assistant:

1. Create a folder called `.continue/mcpServers` at the top level of your workspace

2. Add a file called `playwright-mcp.yaml` to this folder.

3. Write the following contents to `playwright-mcp.yaml` and save.

```yaml title=".continue/mcpServers/playwright-mcp.yaml"

name: Playwright mcpServer

version: 0.0.1

schema: v1

mcpServers:

- name: Browser search

command: npx

args:

- "@playwright/mcp@latest"

```

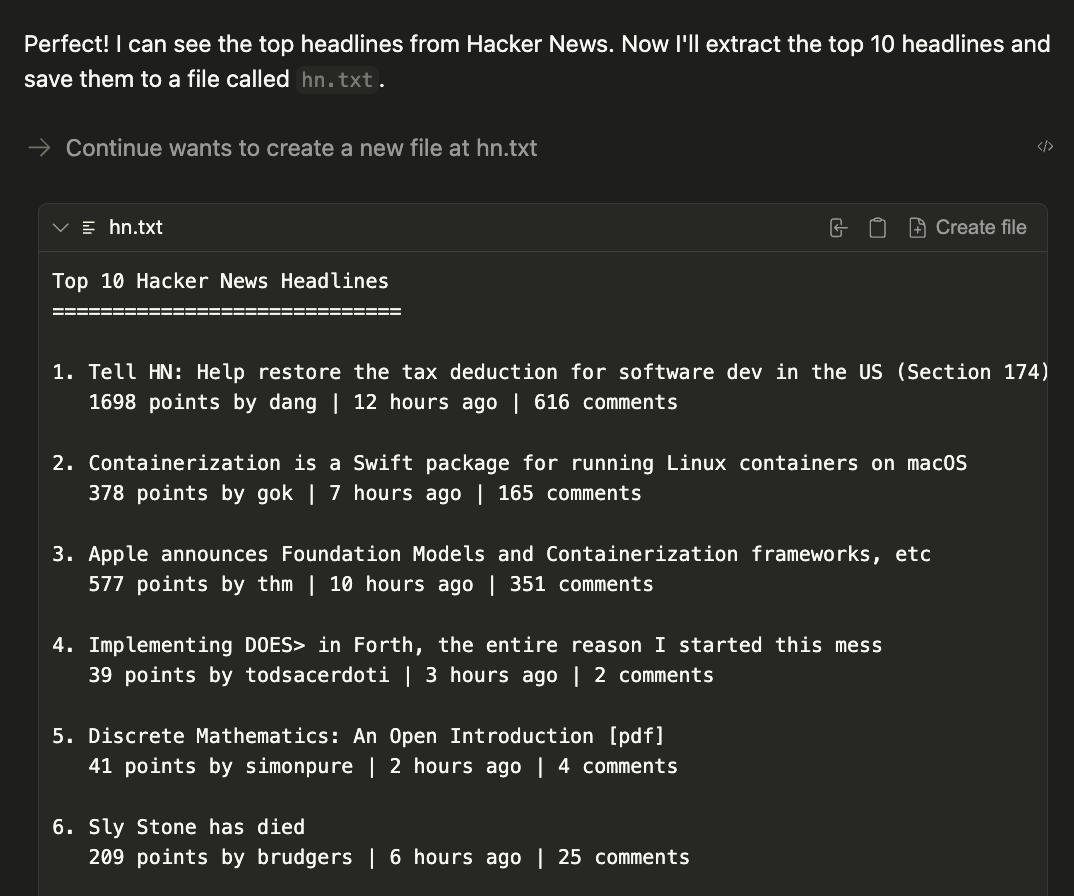

Now test your MCP server by prompting the following command:

```

Open the browser and navigate Hacker News. Save the top 10 headlines in a hn.txt file.

```

The result will be a generated file called `hn.txt` in the current working directory.

## Continue Documentation Search

You can set up an MCP server to search the Continue documentation directly from your agent. This is particularly useful for getting help with Continue configuration and features.

For complete setup instructions, troubleshooting, and usage examples, see the [Continue MCP Reference](/reference/continue-mcp).

## Using MCP Servers

To set up your own MCP server, read the [MCP

quickstart](https://modelcontextprotocol.io/quickstart) and then [create an

`mcpServers`

block](https://hub.continue.dev/new?type=block\&blockType=mcpServers) or add a local MCP

server block to your [config file](./configuration.md):

```yaml title="config.yaml"

mcpServers:

- name: SQLite MCP

command: npx

args:

- "-y"

- "mcp-sqlite"

- "/path/to/your/database.db"

```

```json title="config.json"

{

"experimental": {

"modelContextProtocolServers": [

{

"transport": {

"type": "stdio",

"command": "uvx",

"args": ["mcp-server-sqlite", "--db-path", "/path/to/your/database.db"]

}

}

]

}

}

```

### Syntax

MCP blocks follow the established syntax for blocks, with a few additional properties specific to MCP servers.

* `name` (**required**): A display name for the MCP server.

* `command` (**required**): The command to run to start the MCP server.

* `type` (optional): The type of the MCP server: `sse`, `stdio`, `streamable-http`

* `args` (optional): Arguments to pass to the command.

* `env` (optional): Secrets to be injected into the command as environment variables.

### Transport Types

MCP now supports remote server connections through HTTP-based transports, expanding beyond the traditional local stdio transport method. This enables integration with cloud-hosted MCP servers and distributed architectures.

#### Server-Sent Events (SSE) Transport

For real-time streaming communication, use the SSE transport:

```yaml

mcpServers:

- name: Name

type: sse

url: https://....

```

#### Standard Input/Output (stdio)

For local MCP servers that communicate via standard input and output:

```yaml

mcpServers:

- name: Name

type: stdio

command: npx

args:

- "@modelcontextprotocol/server-sqlite"

- "/path/to/your/database.db"

```

#### Streamable HTTP Transport

For standard HTTP-based communication with streaming capabilities:

```yaml

mcpServers:

- name: Name

type: streamable-http

url: https://....

```

These remote transport options allow you to connect to MCP servers hosted on remote infrastructure, enabling more flexible deployment architectures and shared server resources across multiple clients.

For detailed information about transport mechanisms and their use cases, refer to the official MCP documentation on [transports](https://modelcontextprotocol.io/docs/concepts/transports#server-sent-events-sse).

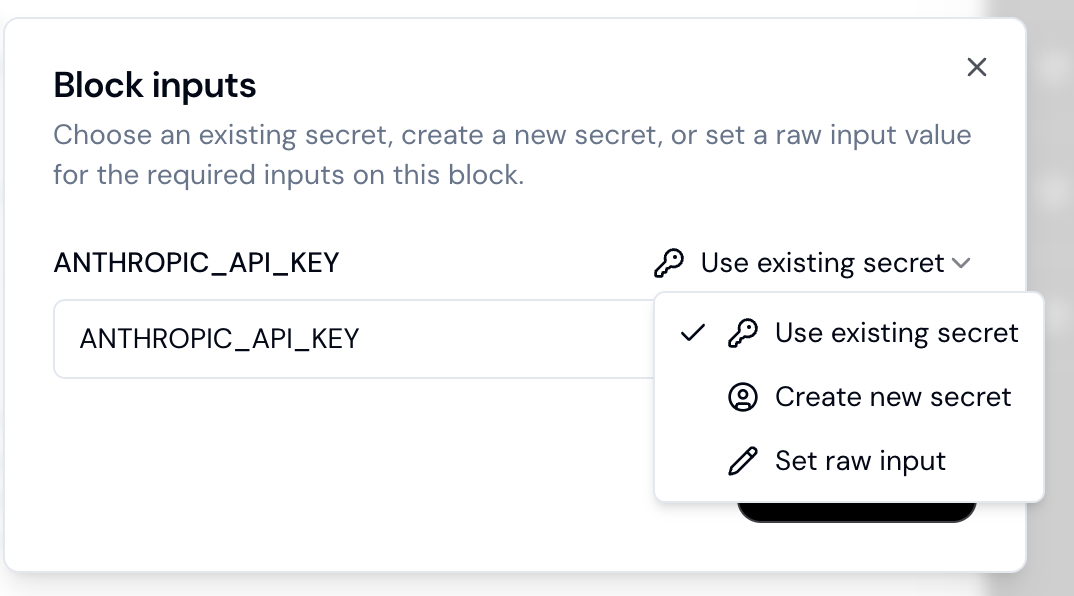

### Working with Secrets

With some MCP servers you will need to use API keys or other secrets. You can leverage locally stored environments secrets

as well as access hosted secrets in the Continue Hub. To leverage Hub secrets, you can use the `inputs` property in your MCP env block instead of `secrets`.

```yaml

mcpServers:

- name: Supabase MCP

command: npx

args:

- -y

- "@supabase/mcp-server-supabase@latest"

- --access-token

- ${{ secrets.SUPABASE_TOKEN }}

env:

SUPABASE_TOKEN: ${{ secrets.SUPABASE_TOKEN }}

- name: GitHub

command: npx

args:

- "-y"

- "@modelcontextprotocol/server-github"

env:

GITHUB_PERSONAL_ACCESS_TOKEN: ${{ secrets.GITHUB_PERSONAL_ACCESS_TOKEN }}

```

# Prompts

Source: https://docs.continue.dev/customize/deep-dives/prompts

Prompts are reusable instructions that can be referenced at any time during chat. They are especially useful as context for repetitive and/or complex tasks.

Prompts were previously defined in a `.prompt` file format, but for

consistency we now recommend using the same Markdown format as

[rules](./rules.mdx) and adding `alwaysApply: false` to the frontmatter so

that they are manually triggered.

### Quick Start

Below is a quick example of setting up a prompt file:

1. Create a folder called `.continue/rules` at the top level of your workspace

2. Add a file called `review-prompt.md` to this folder.

3. Write the following contents to `review-prompt.md` and save.

```md title="review-prompt.md"

---

name: Redux best practices review

alwaysApply: false

---

Review the currently open file for adherence to Redux best practices, as explained in their style guide at https://redux.js.org/style-guide/.

```

Now to use this prompt, you can open Chat, type /, select the prompt, and type out any additional instructions you'd like to add.

## Further Examples

Below are more examples to get you started. You can also visit the Hub to [explore prompts](https://hub.continue.dev/explore/prompts) or [create your own](https://hub.continue.dev/new?type=block\&blockType=prompts).

### Security review

```md title="security-review.md"

---

name: Security best practices review

alwaysApply: false

---

Review the changes in the current git diff for these security best practices:

- Does the architecture follow security-by-design principles?

- Are there potential security vulnerabilities in the system design?

- Is sensitive data handled appropriately throughout the lifecycle?

```

### Pull in commonly used files for tasks

```md title="typeorm-entity-generator.md"

---

name: Generate a new TypeORM entity

alwaysApply: false

---

Referencing `src/db/dataSource.ts` and `src/db/entity/SampleEntity.ts`, generate a new TypeORM entity based on the following requirements:

```

# Rules

Source: https://docs.continue.dev/customize/deep-dives/rules

Rules are used to provide instructions to the model for Chat, Edit, and Agent requests.

Rules provide instructions to the model for [Chat](../../features/chat/quick-start), [Edit](../../features/edit/quick-start), and [Agent](../../features/agent/quick-start) requests.

Rules are not included in [autocomplete](./autocomplete.mdx) or

[apply](../model-roles/apply.mdx).

## How it works

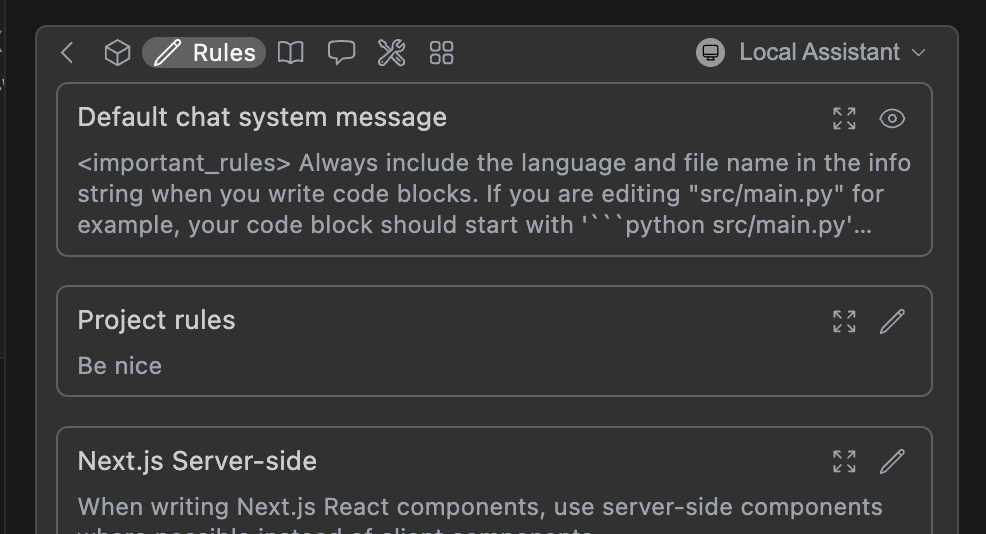

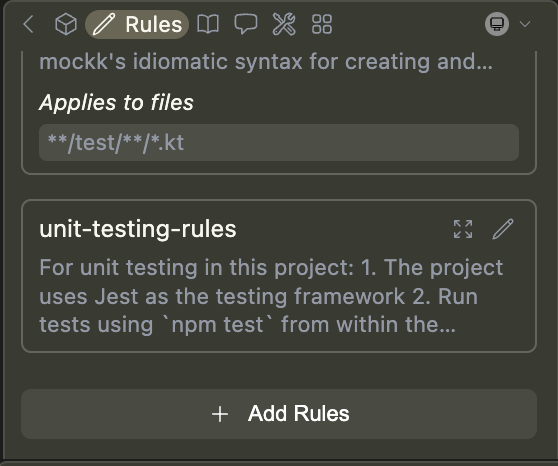

You can view the current rules by clicking the pen icon above the main toolbar:

To form the system message, rules are joined with new lines, in the order they appear in the toolbar. This includes the base chat system message ([see below](#chat-system-message)).

## Quick Start

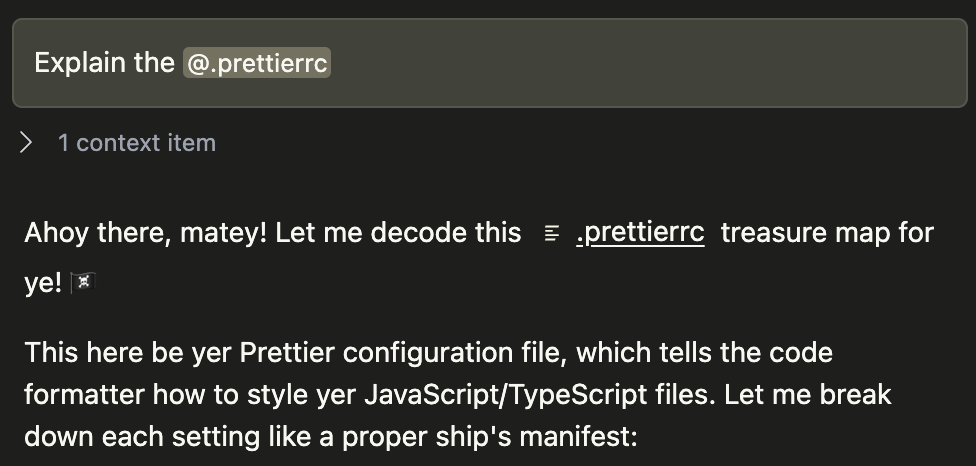

Below is a quick example of setting up a new rule file:

1. Create a folder called `.continue/rules` at the top level of your workspace

2. Add a file called `pirates-rule.md` to this folder.

3. Write the following contents to `pirates-rule.md` and save.

```md title=".continue/rules/pirates-rule.md"

---

name: Pirate rule

---

- Talk like a pirate.

```

Now test your rules by asking a question about a file in chat.

## Creating rules blocks

Rules can be added locally using the "Add Rules" button while viewing the Local Assistant's rules.

**Automatically create local rule blocks**: When in Agent mode, you can prompt the agent to create a rule for you using the `create_rule_block` tool if enabled.

For example, you can say "Create a rule for this", and a rule will be created for you in `.continue/rules` based on your conversation.

Rules can also be added to an Assistant on the Continue Hub.

Explore available rules [here](https://hub.continue.dev), or [create your own](https://hub.continue.dev/new?type=block\&blockType=rules) in the Hub.

### Syntax

Rules were originally defined in YAML format (demonstrated below), but we

introduced Markdown for easier editing. While both are still supported, we

recommend Markdown.

Rules blocks can be simple text, written in YAML configuration files, or as Markdown (`.md`) files. They can have the following properties:

* `name` (**required** for YAML): A display name/title for the rule

* `globs` (optional): When files are provided as context that match this glob pattern, the rule will be included. This can be either a single pattern (e.g., `"**/*.{ts,tsx}"`) or an array of patterns (e.g., `["src/**/*.ts", "tests/**/*.ts"]`).

* `regex` (optional): When files are provided as context and their content matches this regex pattern, the rule will be included. This can be either a single pattern (e.g., `"^import .* from '.*';$"`) or an array of patterns (e.g., `["^import .* from '.*';$", "^export .* from '.*';$"]`).

* `description` (optional): A description for the rule. Agents may read this description when `alwaysApply` is false to determine whether the rule should be pulled into context.

* `alwaysApply`: Determines whether the rule is always included. Behavior is described below:

* `true`: Always included, regardless of file context

* `false`: Included if globs exist AND match file context, or the agent decides to pull the rule into context based on its description

* `undefined` (default behavior): Included if no globs exist OR globs exist and match

```md title="doc-standards.md"

---

name: Documentation Standards

globs: docs/**/*.{md,mdx}

alwaysApply: false

description: Standards for writing and maintaining Continue Docs

---

# Continue Docs Standards

- Follow Docusaurus documentation standards

- Include YAML frontmatter with title, description, and keywords

- Use consistent heading hierarchy starting with h2 (##)

- Include relevant Admonition components for tips, warnings, and info

- Use descriptive alt text for images

- Include cross-references to related documentation

- Reference other docs with relative paths

- Keep paragraphs concise and scannable

- Use code blocks with appropriate language tags

```

```yaml title="doc-standards.yaml"

name: Documentation Standards

version: 1.0.0

schema: v1

rules:

- name: Documentation Standards

globs: docs/**/*.{md,mdx}

alwaysApply: false

rule: >

- Follow Docusaurus documentation standards

- Include YAML frontmatter with title, description, and keywords

- Use consistent heading hierarchy starting with h2 (##)

- Include relevant Admonition components for tips, warnings, and info

- Use descriptive alt text for images

- Include cross-references to related documentation

- Reference other docs with relative paths

- Keep paragraphs concise and scannable

- Use code blocks with appropriate language tags

```

### `.continue/rules` folder

You can create project-specific rules by adding a `.continue/rules` folder to the root of your project and adding new rule files.

Rules files are loaded in lexicographical order, so you can prefix them with numbers to control the order in which they are applied. For example: `01-general.md`, `02-frontend.md`, `03-backend.md`.

### Example: TypeScript Rules

```md title=".continue/rules/typescript.md"

---

name: TypeScript Best Practices

globs: ["**/*.ts", "**/*.tsx"]

---

# TypeScript Rules

- Always use TypeScript interfaces for object shapes

- Use type aliases sparingly, prefer interfaces

- Include proper JSDoc comments for public APIs

- Use strict null checks

- Prefer readonly arrays and properties where possible

- modularize components into smaller, reusable pieces

```

### Chat System Message

Continue includes a simple default system message for [Chat](../../features/chat/quick-start) and [Agent](../../features/agent/quick-start) requests, to help the model provide reliable codeblock formats in its output.

This can be viewed in the rules section of the toolbar (see above), or in the source code [here](https://github.com/continuedev/continue/blob/main/core/llm/constructMessages.ts#L4).

Advanced users can override this system message for a specific model if needed by using `chatOptions.baseSystemMessage`. See the [`config.yaml` reference](/reference#models).

### `.continuerules`

`.continuerules` will be deprecated in a future release. Please use the

`.continue/rules` folder instead.

You can create project-specific rules by adding a `.continuerules` file to the root of your project. This file is raw text and its full contents will be used as rules.

# Slash commands

Source: https://docs.continue.dev/customize/deep-dives/slash-commands

Shortcuts that can be activated by prefacing your input with '/'

Slash commands are shortcuts that can be activated by typing '/' in a chat session (press cmd/ctrl + L (VS Code) or cmd/ctrl + J (JetBrains)), and selecting from the dropdown.

Slash commands can be combined with additional instructions, including [context providers](../custom-providers.mdx) or highlighted code.

## Prompts

### Assistant prompt blocks

The easiest way to add a slash command is by adding [`prompt` blocks](../../hub/blocks/block-types.md#prompts) to your assistant, which show up as slash commands in [Chat](../../features/chat/how-it-works.mdx).

### Prompt files

It is also possible to write your own slash command by defining a “.prompt file.” Prompt files can be as simple as a text file, but also include templating so that you can refer to files, URLs, highlighted code, and more.

Learn more about prompt files [here](./prompts.md)

### MCP Server prompts

Any prompts provided by [Model Context Protocol](https://modelcontextprotocol.io/introduction) servers are also accessible in chat as Slash Commands. See the [MCP Server Deep Dive](./mcp.mdx) for more details.

# VS Code Actions

Source: https://docs.continue.dev/customize/deep-dives/vscode-actions

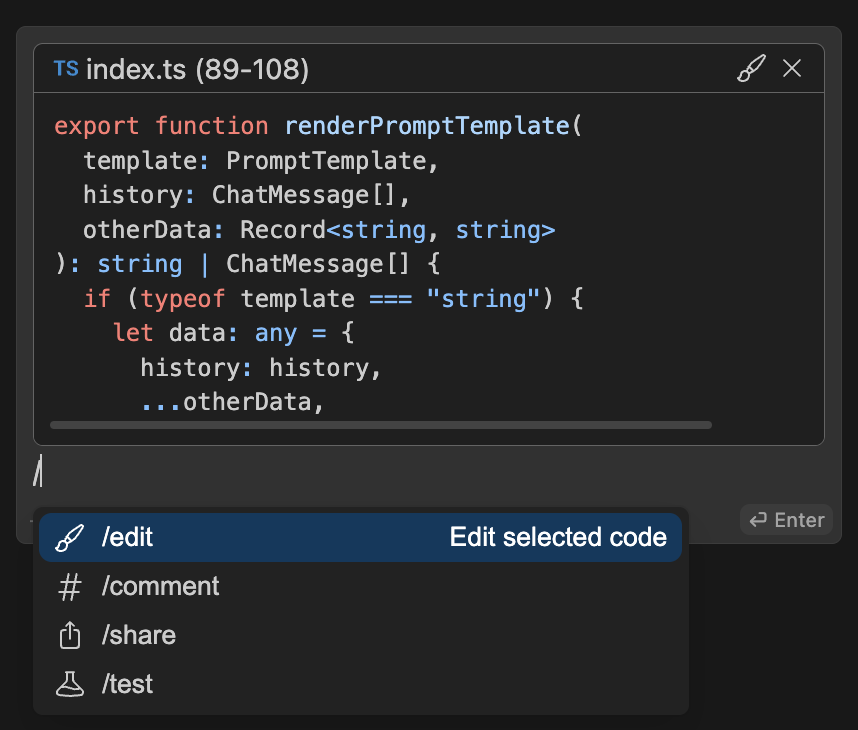

### Other triggers for Actions (VS Code)

Currently all of these are only available in VS Code

To make common use cases even more accessible, we provide a handful of other ways to invoke actions in VS Code.

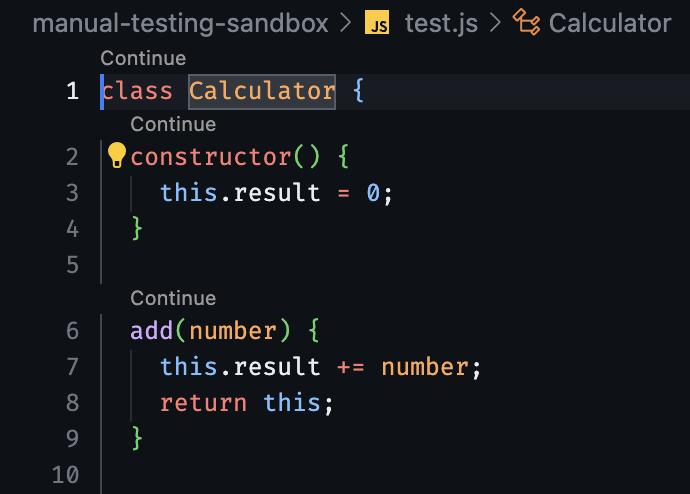

## Quick actions

Quick Actions are displayed as buttons above top-level classes and functions in your source code, letting you invoke actions with one click. They will edit that class or function, but nothing outside of it. They can also be customized with [.prompt files](./prompts.md) to perform custom actions.

By default, quick actions are disabled, but can be enabled with the “Continue: Enable Quick Actions” in VS Code settings.

## Right click actions

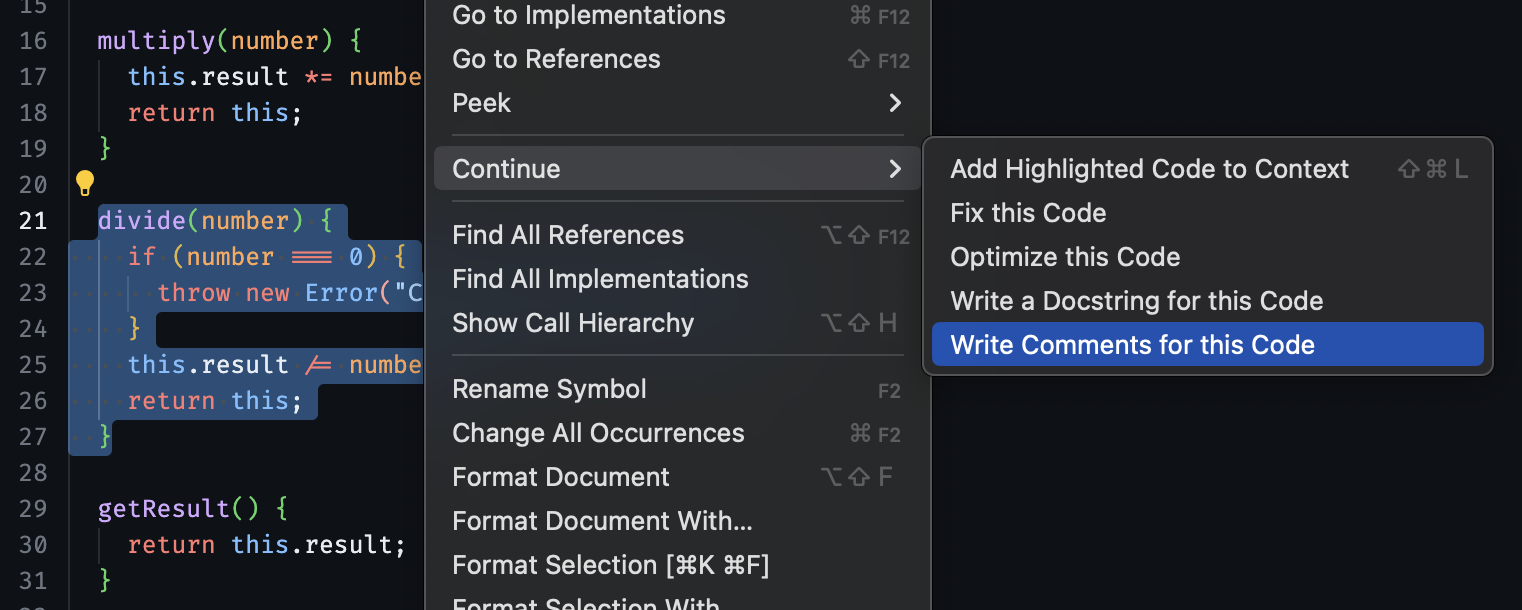

Right click actions let you highlight a desired region of code, right click, and then select an action from the dropdown menu.

The highlighted code you’ve selected will be included in your prompt alongside a pre-written set of instructions depending on the selected action. This is the only section of code that the model will attempt to edit.

Right click actions that generate inline diffs use the same prompt and response processing logic as [Edit](../../features/edit/how-it-works.mdx).

## Debug action

The debug action is a special built-in keyboard shortcut in the VS Code extension. Use cmd/ctrl + shift + R to instantly copy the contents of the most recent terminal output into the chat sidebar and get debugging advice. There is no additional, non-visible information sent to the language model.

```

I got the following error, can you please help explain how to fix it?

[ERROR_MESSAGE]

```

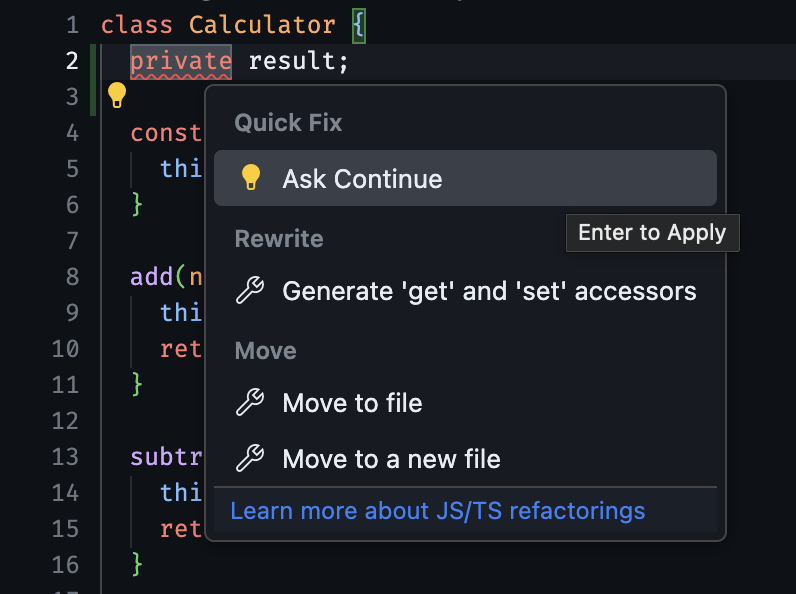

## Quick fixes

Whenever you see red/yellow underlines in your code indicating errors, you can place your cursor nearby and VS Code will display a lightbulb icon. Either clicking the lightbulb or using the keyboard shortcut cmd/ctrl + . will show a dropdown menu of quick fixes. One of these will be the “Ask Continue” action. Either click or use cmd/ctrl + . again and Continue will attempt to help solve the problem.

Similarly to the debug action, quick actions transparently inject a prompt into the chat window. When you select “Ask Continue”, the 3 lines above and below the error are sent to the chat followed by the question “How do I fix the following problem in the above code?: \[ERROR\_MESSAGE]”.

# Cloudflare

Source: https://docs.continue.dev/customize/model-providers/more/cloudflare

Cloudflare Workers AI can be used for both chat and tab autocompletion in Continue. Here is an example of Cloudflare Workers AI configuration:

```yaml title="config.yaml"

models:

- name: Llama 3 8B

provider: cloudflare

apiKey:

model: "@cf/meta/llama-3-8b-instruct"

contextLength: 2400

defaultCompletionOptions:

maxTokens: 500

roles:

- chat

env:

accountId: YOUR CLOUDFLARE ACCOUNT ID

- name: DeepSeek Coder 6.7b Instruct

provider: cloudflare

apiKey:

model: "@hf/thebloke/deepseek-coder-6.7b-instruct-awq"

contextLength: 2400

defaultCompletionOptions:

maxTokens: 500

roles:

- chat

env:

accountId: YOUR CLOUDFLARE ACCOUNT ID

- name: DeepSeek 7b

provider: cloudflare

apiKey:

model: "@hf/thebloke/deepseek-coder-6.7b-base-awq"

roles:

- autocomplete

env:

accountId: YOUR CLOUDFLARE ACCOUNT ID

```

```json title="config.json"

{

"models": [

{

"title": "Llama 3 8B",

"provider": "cloudflare",

"accountId": "YOUR CLOUDFLARE ACCOUNT ID",

"apiKey": "YOUR CLOUDFLARE API KEY",

"contextLength": 2400,

"completionOptions": {

"maxTokens": 500

},

"model": "@cf/meta/llama-3-8b-instruct"

},

{

"title": "DeepSeek Coder 6.7b Instruct",

"provider": "cloudflare",

"accountId": "YOUR CLOUDFLARE ACCOUNT ID",

"apiKey": "YOUR CLOUDFLARE API KEY",

"contextLength": 2400,

"completionOptions": {

"maxTokens": 500

},

"model": "@hf/thebloke/deepseek-coder-6.7b-instruct-awq"

}

],

"tabAutocompleteModel": {

"title": "DeepSeek 7b",

"provider": "cloudflare",

"accountId": "YOUR CLOUDFLARE ACCOUNT ID",

"apiKey": "YOUR CLOUDFLARE API KEY",

"model": "@hf/thebloke/deepseek-coder-6.7b-base-awq"

}

}

```

Visit the [Cloudflare dashboard](https://dash.cloudflare.com/) to [create an API key](https://developers.cloudflare.com/fundamentals/api/get-started/create-token/).

Review [available models](https://developers.cloudflare.com/workers-ai/models/) on Workers AI

[View the source](https://github.com/continuedev/continue/blob/main/core/llm/llms/Cloudflare.ts)

# Cohere

Source: https://docs.continue.dev/customize/model-providers/more/cohere

Before using Cohere, visit the [Cohere dashboard](https://dashboard.cohere.com/api-keys) to create an API key.

## Chat model

We recommend configuring **Command A** as your chat model.

```yaml title="config.yaml"

models:

- name: Command A 03-2025

provider: cohere

model: command-a-03-2025

apiKey:

```

```json title="config.json"

{

"models": [

{

"title": "Command A 03-2025",

"provider": "cohere",

"model": "command-a-03-2025",

"apiKey": ""

}

]

}

```

## Autocomplete model

Cohere currently does not offer any autocomplete models.

[Click here](../../model-roles/autocomplete.md) to see a list of autocomplete model providers.

## Embeddings model

We recommend configuring **embed-v4.0** as your embeddings model.

```yaml title="config.yaml"

models:

- name: Cohere Embed v4.0

provider: cohere

model: embed-v4.0

apiKey:

roles:

- embed

```

```json title="config.json"

{

"embeddingsProvider": {

"provider": "cohere",

"model": "embed-v4.0",

"apiKey": ""

}

}

```

## Reranking model

We recommend configuring **rerank-v3.5** as your reranking model.

```yaml title="config.yaml"

models:

- name: Cohere Rerank v3.5

provider: cohere

model: rerank-v3.5

apiKey:

roles:

- rerank

```

```json title="config.json"

{

"reranker": {

"name": "cohere",

"params": {

"model": "rerank-v3.5",

"apiKey": ""

}

}

}

```

# DeepInfra

Source: https://docs.continue.dev/customize/model-providers/more/deepinfra

[DeepInfra](https://deepinfra.com) provides inference for open-source models at very low cost. To get started with DeepInfra, obtain your API key [here](https://deepinfra.com/dash). Then, find the model you want to use [here](https://deepinfra.com/models?type=text-generation) and copy the name of the model. Continue can then be configured to use the `DeepInfra` LLM class, like the example here:

```yaml title="config.yaml"

models:

- name: DeepInfra

provider: deepinfra

model: mistralai/Mixtral-8x7B-Instruct-v0.1

apiKey:

```

```json title="config.json"

{

"models": [

{